The Unseen Bedrock: Why Data Resilience Isn’t Optional Anymore

In today’s hyper-connected, fast-paced digital era, data isn’t merely an asset; it’s the very lifeblood, the intricate nervous system, of organizations large and small. It pulses through every transaction, every customer interaction, every strategic decision, painting a comprehensive picture of your business. Lose that flow, and you risk a catastrophic systemic shutdown. That’s why World Backup Day, observed annually on March 31, serves as such a poignant, almost visceral, reminder of the absolute necessity to protect this invaluable resource. It’s more than just a date on the calendar; it’s a moment to pause, reflect, and frankly, get your ducks in a row when it comes to safeguarding your digital existence.

Think about it: from intricate customer databases and sensitive financial records to proprietary intellectual property and critical operational data, the sheer volume of information we generate and rely upon is staggering. And honestly, it’s only going to grow. But with this explosion of data comes an equally rapid expansion of threats – the digital wolves at the door, if you will. So, how do we build a fortress around this most precious commodity? We embrace a holistic approach to data resilience, starting with the tried-and-true foundations and building upwards.

The Evolving Threat Landscape: More Than Just Hard Drive Failure

Remember the good old days, when a ‘data loss event’ typically meant a crashed hard drive or an accidental deletion? Simpler times, right? Today, the threat landscape is a labyrinth of sophisticated dangers, each one more insidious than the last. You’re not just guarding against equipment failure anymore; you’re battling an invisible, ever-mutating adversary.

Ransomware, for instance, has evolved from a nuisance into a multi-billion-dollar criminal industry, holding entire organizations hostage by encrypting their files. It spreads like a digital pandemic across networks, locking down everything from patient records in hospitals to manufacturing lines. And encryption is only half of it: attackers increasingly exfiltrate your data before encrypting it, then threaten to leak it if you don’t pay up. It’s a double whammy, and it’s terrifying.

Then there are natural disasters. While we tend to think of cyber threats first, a hurricane, an earthquake, or even a localized fire can obliterate physical infrastructure in a heartbeat, taking your unprotected data right along with it. Your meticulously crafted spreadsheets, your innovative designs – poof, gone with the wind and the smoke. It happens, often when you least expect it, and leaves a chilling silence over the office.

And let’s not forget human error, which is frankly often the most common culprit. An employee accidentally deletes a critical file, clicks on a phishing link, or misconfigures a server. Sometimes it’s a simple oversight; other times it’s a moment of distraction. Either way, the consequences can be just as devastating as a coordinated cyberattack. To err is human, but in the context of data, those errors can have profound ripple effects. My old colleague, Sarah, once accidentally wiped a client’s entire project folder. We spent a frantic weekend piecing it back together from disparate versions. It was a true nightmare.

Supply chain attacks are also becoming more prevalent: attackers compromise a trusted vendor’s systems and use that foothold to infiltrate the vendor’s clients. Every strand in that web of interconnected systems is a potential vulnerability. So, given this tangle of threats, what’s our first line of defense?

The 3-2-1 Backup Rule: A Timeless Blueprint, Modern Challenges

The 3-2-1 backup rule isn’t new; it’s practically ancient in tech terms, but its enduring relevance speaks volumes about its effectiveness. It remains a cornerstone, a gold standard, for data protection. If you’re not following it, honestly, you’re playing a dangerous game of digital roulette.

Let’s break it down, because understanding the ‘why’ behind each component is crucial:

- Three copies of your data: This includes your original live data, which you’re working on right now, plus two distinct backups. Why three? Because redundancy is king. If one copy fails, you still have two others. It’s like having a spare tire for your spare tire. You’d think one backup would be enough, wouldn’t you? But experience tells us otherwise. Having multiple copies significantly reduces the probability of total data loss, provided the copies don’t all fail for the same reason. That is exactly what the next two rules guard against.

- Two different storage types: This is where diversity comes in. Don’t put all your digital eggs in one basket. If you’re using only external hard drives, and they all come from the same batch with a manufacturing defect, you’re sunk. Similarly, relying solely on one cloud provider could leave you vulnerable to a service outage on their end. So, mix it up. Perhaps an external hard drive (local physical storage) combined with a cloud service (offsite storage accessed over the network). Or maybe a Network Attached Storage (NAS) device and tape backups. The point is to diversify your media, so a single point of failure in a storage technology doesn’t take out all your copies.

- One copy offsite: This is your ultimate safety net against localized disasters. Imagine your office building catches fire, or there’s a flood. If all your backups are on-premises, even if they’re on different media types, they’re all vulnerable to the same physical catastrophe. Storing one copy in a geographically separate location, whether another office, a secure data center, or the cloud, ensures that even if your primary site is completely destroyed, your critical data remains safe and sound. It’s the vital insurance policy that gives you peace of mind when everything else feels like it’s falling apart.

This simple, yet profoundly effective, strategy ensures redundancy and significantly enhances data resilience. But adhering to the 3-2-1 rule isn’t enough on its own; it’s the foundation upon which more sophisticated strategies are built.
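To make the rule concrete, here’s a minimal Python sketch that audits a backup inventory against the three conditions. The `Copy` record and its `media` and `offsite` fields are hypothetical and exist only for this illustration. A real audit would pull this information from your backup tool’s reporting rather than from a hand-written list.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    """One copy of a data set (hypothetical inventory record)."""
    name: str
    media: str     # storage type, e.g. "ssd", "nas", "tape", "cloud"
    offsite: bool  # stored at a geographically separate location?


def check_321(copies: list[Copy]) -> list[str]:
    """Return the 3-2-1 conditions this inventory violates (empty list = compliant)."""
    problems = []
    if len(copies) < 3:
        problems.append(f"need at least 3 copies, found {len(copies)}")
    media_types = {c.media for c in copies}
    if len(media_types) < 2:
        problems.append(f"need at least 2 storage types, found {len(media_types)}")
    if not any(c.offsite for c in copies):
        problems.append("need at least 1 offsite copy")
    return problems


inventory = [
    Copy("production volume", media="ssd", offsite=False),  # the live data counts as copy 1
    Copy("nightly NAS backup", media="nas", offsite=False),
    Copy("cloud backup", media="cloud", offsite=True),
]
print(check_321(inventory))  # []
```

An inventory of two backups on the same kind of drive, both kept in the office, would fail all three checks at once.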

A Hybrid Harmony: Weaving Cloud and Physical Storage Together

While the 3-2-1 rule sets the conceptual framework, modern businesses are increasingly adopting a hybrid backup model to achieve that perfect blend of agility, accessibility, and robust security. It’s about leveraging the best of both worlds, isn’t it?

Cloud backups have truly revolutionized data management. Services like Azure Backup and AWS Backup, along with offerings from vendors such as Veeam and Acronis, provide enormous scalability and accessibility. You can spin up storage capacity almost instantly, paying only for what you use, and access your data from virtually anywhere with an internet connection. This accessibility is a game-changer for distributed teams and rapid recovery scenarios. Cloud solutions also typically come with built-in redundancy across multiple data centers, reducing the risk of a single point of failure within their infrastructure. However, relying solely on the cloud has its drawbacks. Moving very large data sets over the internet takes time, so depending on your bandwidth, recovery times might stretch out. Plus, there are concerns about vendor lock-in and, for some, the security implications of trusting a third party with highly sensitive data.

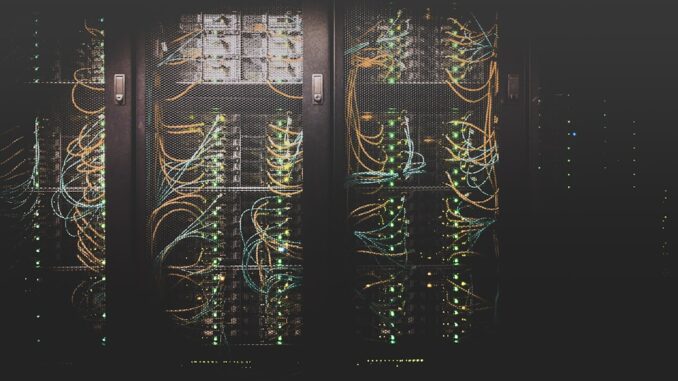

On the other hand, physical backups provide tangible, on-premises control. These can use devices such as NAS (Network Attached Storage) units, SAN (Storage Area Network) arrays, or even traditional external hard drives and tape drives. A NAS, for instance, offers robust, centralized storage within your network, allowing for quick local backups and restores. Tape, despite its ‘old-school’ reputation, remains incredibly cost-effective for long-term archival storage. Once cartridges are ejected and stored away, they form an air gap: they’re physically disconnected from the network, which puts them out of reach of network-borne attacks like ransomware. The beauty of physical backups lies in their speed for local recovery and, for media kept offline, their complete isolation from network-borne threats. If your network is compromised, your offline physical backup remains untouched. But, of course, they require physical management and space, and they’re susceptible to localized disasters if not stored offsite.

The genius of the hybrid approach lies in its synergy. You might use cloud solutions for rapid recovery of frequently accessed data, ensuring business continuity with minimal downtime. For instance, if a server crashes, you can quickly restore critical applications from your cloud backup. Meanwhile, offline physical backups, perhaps on tape or an air-gapped NAS, provide a final layer of protection against sophisticated cyber threats or widespread network outages. Imagine a catastrophic ransomware attack encrypting your entire network; you’d turn to those offline backups, confident they haven’t been touched. Together, they give you rapid recovery through the cloud and robust protection against targeted cyber threats through offline storage. It’s a smart move, wouldn’t you agree?

Beyond the Backup: The Imperative of Testing and Automation

Having backups is one thing; knowing they actually work when you need them is another entirely. This is where regular testing and automation step in, transforming a mere collection of files into a truly resilient data protection strategy. If you’re not testing, you’re not prepared. It’s that simple.

Automated backup solutions are absolutely essential in today’s environment. Relying on manual processes for backups is a recipe for disaster. Human error, plain and simple, can creep in. Someone forgets a step, schedules it incorrectly, or just plain doesn’t do it. Automation, conversely, reduces this human element significantly, ensuring consistency and reliability. Tools can be configured to run backups at specific intervals – hourly, daily, weekly – without manual intervention, logging every success and failure. This frees up IT staff for more strategic tasks and drastically lowers the risk of missed backups. It’s a ‘set it and forget it’ approach, well, almost. You still need to monitor it, of course, but the heavy lifting is handled automatically.
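Here’s a minimal Python sketch of a single automated backup job. It writes a timestamped, compressed archive and logs every success and failure. The function name `run_backup` and the directory layout are hypothetical. In practice you’d hand the scheduling to something like cron, a systemd timer, or Windows Task Scheduler, and many teams would use a dedicated backup product rather than a script.

```python
from __future__ import annotations

import logging
import shutil
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("backup")


def run_backup(source: Path, dest_dir: Path) -> Optional[Path]:
    """Archive `source` into a timestamped .tar.gz under `dest_dir`.

    Logs the outcome either way and returns the archive path, or None on failure,
    so a scheduler or monitoring script can alert on missed backups.
    """
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    base_name = dest_dir / f"{source.name}-{stamp}"
    try:
        if not source.is_dir():
            raise FileNotFoundError(f"source directory not found: {source}")
        dest_dir.mkdir(parents=True, exist_ok=True)
        # make_archive appends the ".tar.gz" extension and returns the full path.
        archive = Path(shutil.make_archive(str(base_name), "gztar", root_dir=source))
    except OSError as exc:
        log.error("backup of %s FAILED: %s", source, exc)
        return None
    log.info("backup of %s succeeded: %s (%d bytes)", source, archive, archive.stat().st_size)
    return archive
```

The failure branch matters as much as the success branch. It only helps if that log line feeds monitoring someone actually watches, which is the ‘almost’ in ‘set it and forget it’.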

But here’s the kicker: regularly testing those backups is crucial, arguably even more important than taking them. What good is a backup if it’s corrupt, incomplete, or simply can’t be restored? I’ve seen it happen. A company thinks it’s safe, then disaster strikes, and when it goes to restore, the files are either unreadable or ancient. It’s a sickening feeling, like finding your parachute has holes after you’ve jumped. Testing confirms that your backups will actually work in a real recovery. This isn’t just about verifying file integrity; it’s about performing full-scale restore drills. Can you restore a single file? An entire database? A whole server? And how long does it take? These tests should simulate real-world disaster scenarios. Think about different types of tests:

- Spot checks: Periodically restoring a few random files to ensure they’re accessible and uncorrupted.

- Application-level restores: Verifying that restored applications launch and function correctly with their data.

- Full system recovery tests: The big one. Actually simulating a server crash and attempting a complete bare-metal restore to different hardware or a virtual machine. This reveals potential bottlenecks, missing drivers, or configuration issues long before a real emergency.

These tests aren’t just technical exercises; they’re critical validation points for your entire data resilience plan. Document the process, record the results, and refine your plan based on what you learn. Remember, a backup that hasn’t been tested is as good as no backup at all. It might even be worse, creating a false sense of security.
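Spot checks are easy to partly automate. This minimal Python sketch assumes you record a SHA-256 checksum for every file at backup time. It doesn’t perform the restore itself. Instead, it compares a random sample of files in a restored copy against that manifest and reports anything missing or altered. The helper names are hypothetical. A checksum match only proves the bytes are intact, not that an application can use them, which is why application-level and full-system tests still matter.

```python
from __future__ import annotations

import hashlib
import random
from pathlib import Path
from typing import Optional


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large files don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """At backup time: map each file's path (relative to root) to its checksum."""
    return {p.relative_to(root).as_posix(): sha256_of(p) for p in root.rglob("*") if p.is_file()}


def spot_check(manifest: dict[str, str], restored_root: Path,
               sample_size: int = 5, seed: Optional[int] = None) -> list[str]:
    """After a test restore: check a random sample, returning files that are missing or altered."""
    rng = random.Random(seed)
    sample = rng.sample(sorted(manifest), min(sample_size, len(manifest)))
    failures = []
    for rel_path in sample:
        restored = restored_root / rel_path
        if not restored.is_file() or sha256_of(restored) != manifest[rel_path]:
            failures.append(rel_path)
    return sorted(failures)
```

Recording the result of each run, including an empty list, gives you the documented history of tests this section recommends.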

Fortifying Defenses: The Power of Immutability and Air-Gapping

In the relentless face of sophisticated cyber threats, particularly ransomware, traditional backups, even if following the 3-2-1 rule, sometimes aren’t enough. Attackers are smart; they often seek out and encrypt or delete backup repositories first. This is where advanced defenses like immutable backups and air-gapping become absolutely non-negotiable.

Immutable backups are a game-changer. Imagine a backup copy that, once created, cannot be modified, encrypted, or deleted for a specified period. It’s like writing something in stone, or more accurately, on a digital write-once, read-many (WORM) medium. The retention lock can be configured so that not even administrators can override it. In that case, a ransomware attack that gains administrative access to your network still can’t alter these copies. They remain a pristine version of your data as it was when the backup was taken, a last line of defense that attackers can’t rewrite. This preserves data integrity and availability even when your primary systems are completely compromised. Many cloud storage providers and modern backup solutions now offer immutable storage options. It’s a critical component of any robust ransomware recovery strategy, giving you a trusted point to restore from, as long as the data was clean when the backup was taken.
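To see what the WORM guarantee means mechanically, here’s a toy in-memory model in Python. `WormStore` and `RetentionLocked` are hypothetical names. The model enforces one rule: an object can be written once and cannot be deleted until its retention date has passed, with no override path. In production that guarantee has to come from the storage layer itself, such as Amazon S3 Object Lock or Azure Blob Storage immutability policies. A lock enforced only by application code can be bypassed by anyone who controls that code.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone
from typing import Optional


class RetentionLocked(Exception):
    """Raised when an operation would overwrite or delete a locked object."""


class WormStore:
    """Toy in-memory model of write-once, read-many storage with a retention lock."""

    def __init__(self) -> None:
        # key -> (data, retain_until)
        self._objects: dict[str, tuple[bytes, datetime]] = {}

    def put(self, key: str, data: bytes, retain_days: int,
            now: Optional[datetime] = None) -> None:
        now = now or datetime.now(timezone.utc)
        if key in self._objects:
            # Write once: an existing object can never be replaced in place.
            raise RetentionLocked(f"{key!r} already exists and cannot be overwritten")
        self._objects[key] = (data, now + timedelta(days=retain_days))

    def get(self, key: str) -> bytes:
        return self._objects[key][0]

    def delete(self, key: str, now: Optional[datetime] = None) -> None:
        now = now or datetime.now(timezone.utc)
        _, retain_until = self._objects[key]
        if now < retain_until:
            # No admin override exists, mirroring a lock that not even administrators can lift.
            raise RetentionLocked(f"{key!r} is locked until {retain_until.isoformat()}")
        del self._objects[key]


store = WormStore()
t0 = datetime(2025, 3, 31, tzinfo=timezone.utc)
store.put("db-2025-03-31.bak", b"backup bytes", retain_days=30, now=t0)
try:
    # Simulate an attacker trying to delete the backup ten days later.
    store.delete("db-2025-03-31.bak", now=t0 + timedelta(days=10))
except RetentionLocked as err:
    print("blocked:", err)
```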

But what if attackers are sophisticated enough to get around even those immutable layers? That’s where air-gapping comes into play. An air-gapped backup is a copy of your data that is physically isolated from your network. Think of it as truly unplugged. This could be data stored on tape cartridges that are then removed from the library and stored securely offsite. It could also be a separate hard drive that’s connected only for the duration of the backup process and then disconnected. While it’s disconnected, no malicious actor, no matter how clever, can reach it digitally. It’s the ultimate ‘break glass in case of emergency’ solution: even in the most catastrophic network-wide compromise, you have a secure, untouched copy of your most vital information. Yes, recovery from it is slow, but it’s about as safe as a copy can get, a clean starting point and a true last resort.

These two strategies, immutability and air-gapping, are not just buzzwords; they represent critical defensive layers in the ongoing arms race against cybercriminals. They don’t replace your 3-2-1 strategy; they enhance it, providing a depth of defense that was once the domain of only the largest, most security-conscious organizations.

Crafting Your Fortress: The Comprehensive Disaster Recovery Plan

Having robust backups is half the battle; knowing exactly how to use them to restore operations swiftly after disruptions is the other, equally critical half. This is the domain of a well-structured Disaster Recovery (DR) plan. It’s your blueprint for getting back online, a detailed roadmap for navigating chaos. Without it, even the best backups might just sit there, useless, while your business bleeds.

Developing a DR plan isn’t a one-off task; it’s an ongoing commitment that requires foresight, meticulous planning, and regular refinement. Here are the key components:

- Business Impact Analysis (BIA): Before you can plan recovery, you need to understand what you’re recovering. A BIA identifies critical business functions and processes, assesses the impact of their disruption (financial, reputational, operational), and determines the recovery time objectives (RTO) and recovery point objectives (RPO) for each. RTO dictates the maximum acceptable downtime, while RPO defines the maximum acceptable data loss. For instance, an e-commerce site might have an RPO of minutes and an RTO of hours, whereas an internal archiving system might tolerate an RPO of a day and an RTO of several days. These metrics guide your entire DR strategy.

- Recovery Strategies: Based on your RTOs and RPOs, you’ll select appropriate recovery strategies. This could involve simply restoring from backups, utilizing hot/warm/cold sites, or leveraging Disaster Recovery as a Service (DRaaS) providers who can quickly spin up virtual environments in the cloud. DRaaS has become incredibly popular for its scalability and cost-effectiveness, abstracting away much of the infrastructure headache.

- Roles and Responsibilities: Who does what when disaster strikes? Clearly define roles, responsibilities, and chains of command within your DR team. Who declares a disaster? Who initiates the recovery? Who handles communications? Clarity here is paramount, avoiding confusion when emotions are high.

- Communication Plan: This is often overlooked but utterly vital. How will you communicate with employees, customers, vendors, and stakeholders during an outage? Pre-drafted messages, alternative communication channels (e.g., status pages, emergency contact trees), and designated spokespersons are critical. A vacuum of information breeds panic and erodes trust.

- Testing, Testing, Testing: Just like backups, a DR plan that hasn’t been tested is merely a theoretical exercise. Regular DR drills, ranging from tabletop exercises (walking through the plan conceptually) to full-scale simulations (actually attempting a failover and recovery), are essential. These tests expose weaknesses, highlight bottlenecks, and ensure your team knows their roles inside and out. It’s not enough to have a plan written down; your team needs to be able to execute it flawlessly under pressure.
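The RPO and RTO figures from a BIA can be checked mechanically against what your backups actually deliver. This minimal Python sketch assumes periodic backups. Just before a backup finishes, the newest usable copy is the previous one, so the worst-case data loss is roughly one backup interval plus one backup’s duration. The restore time should come from your most recent drill. The `System` fields and the example values are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class System:
    name: str
    rpo: timedelta                  # max acceptable data loss, from the BIA
    rto: timedelta                  # max acceptable downtime, from the BIA
    backup_interval: timedelta      # time between the starts of consecutive backups
    backup_duration: timedelta      # how long one backup takes to complete
    tested_restore_time: timedelta  # measured in the most recent restore drill


def dr_gaps(s: System) -> list[str]:
    """List the ways this system's backup setup misses its BIA targets."""
    issues = []
    # Just before a backup completes, the newest usable copy is the previous one,
    # so worst-case loss is about one interval plus one backup duration.
    worst_case_loss = s.backup_interval + s.backup_duration
    if worst_case_loss > s.rpo:
        issues.append(f"{s.name}: worst-case data loss {worst_case_loss} exceeds RPO {s.rpo}")
    if s.tested_restore_time > s.rto:
        issues.append(f"{s.name}: tested restore {s.tested_restore_time} exceeds RTO {s.rto}")
    return issues


shop = System("e-commerce site", rpo=timedelta(minutes=15), rto=timedelta(hours=4),
              backup_interval=timedelta(hours=24), backup_duration=timedelta(hours=1),
              tested_restore_time=timedelta(hours=3))
archive = System("internal archive", rpo=timedelta(days=1), rto=timedelta(days=3),
                 backup_interval=timedelta(hours=12), backup_duration=timedelta(hours=2),
                 tested_restore_time=timedelta(hours=10))
for s in (shop, archive):
    print(dr_gaps(s) or f"{s.name}: meets RPO and RTO")
```

The shop example fails on RPO even though its restore is fast enough. Nightly backups simply can’t meet a 15-minute target, so closing that gap means backing up far more often, not just restoring faster.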

Regular updates and rigorous testing of this plan are essential to maintain its effectiveness. Systems change, personnel shift, and threats evolve. Your DR plan must evolve with them, remaining a living document that safeguards your operations against potential disruptions and ensures a swift return to normalcy.

The Human Firewall: Cultivating a Culture of Data Protection

Technology, for all its brilliance, is only one part of the data resilience equation. The often-forgotten, yet arguably most critical, element is the human one. Because let’s face it, even the most sophisticated firewalls can’t stop an employee from clicking a dodgy link or inadvertently sharing sensitive information. Your employees are your first line of defense, or, regrettably, your biggest vulnerability.

Educating employees on data protection best practices isn’t a one-and-done training session; it’s an ongoing process of awareness and reinforcement. It fosters a culture of security within the organization, making data protection everyone’s responsibility, not just IT’s. This proactive approach significantly reduces the risk of data loss due to human error, which plays a part in a large share of data breaches.

What does this training look like? It goes beyond just ‘don’t click weird links’ (though that’s crucial!). It should cover:

- Phishing and social engineering awareness: Teaching employees to spot suspicious emails, texts, and calls. Simulating phishing attacks can be highly effective in improving vigilance.

- Password hygiene: The importance of strong, unique passwords and the use of password managers.

- Data handling protocols: How to correctly store, share, and dispose of sensitive data. Understanding classification levels (public, internal, confidential).

- Identifying and reporting suspicious activity: Empowering employees to be vigilant and know who to contact if they suspect a breach or anomaly.

- The importance of backups: Making them understand why regular backups are vital, both for their individual work and the company’s survival. When they understand the impact, they’re more likely to follow protocols.

Remember Sarah from earlier? After her accidental data wipe, our company implemented mandatory quarterly training modules focused on data handling and backup procedures, complete with little quizzes. It sounds mundane, but it made a huge difference. Plus, we started having ‘Security Spotlight’ sessions during our weekly team meetings, sharing current threats and best practices. It shifted the mindset from ‘IT’s problem’ to ‘our collective responsibility.’

Leadership buy-in is also paramount. When management champions security awareness and invests in training, it sends a clear message down the ranks. A security-conscious culture creates a human firewall that is far more resilient than any piece of software alone. You can have all the tech in the world, but if your people aren’t on board, you’re building on shaky ground.

Regulatory Rapids and Governance Gates: Navigating Compliance

In today’s globalized economy, data resilience isn’t just about operational continuity; it’s deeply intertwined with regulatory compliance and data governance. Fail here, and you’re not just looking at downtime, you’re potentially staring down hefty fines, legal battles, and severe reputational damage. It’s a complex web of rules, and navigating it requires careful attention.

Regulations like GDPR (General Data Protection Regulation) in Europe and HIPAA (Health Insurance Portability and Accountability Act) in the US for healthcare data impose strict requirements on how organizations collect, store, process, and protect personal and sensitive information. Industry standards such as PCI DSS (Payment Card Industry Data Security Standard) do the same for payment card data. These aren’t suggestions: GDPR and HIPAA are legal mandates, and PCI DSS is enforced contractually through the card brands and banks. Non-compliance can result in eye-watering penalties. Under GDPR, for example, the ceiling is a fixed sum in the millions of euros or a percentage of worldwide annual turnover, whichever is higher.
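The ‘whichever is higher’ rule is easy to misread, so here’s the arithmetic as a short Python sketch. The two tiers follow GDPR Article 83. The lower tier allows fines of up to €10 million or 2% of total worldwide annual turnover of the preceding financial year. The higher tier, for more serious infringements, allows up to €20 million or 4%. These are statutory ceilings, not predictions of what a regulator would actually impose. The function name is hypothetical.

```python
def gdpr_fine_ceiling_eur(annual_turnover_eur: float, higher_tier: bool = True) -> float:
    """Maximum GDPR fine under Article 83: a fixed cap or a share of turnover, whichever is higher."""
    fixed_cap, percent = (20_000_000, 4) if higher_tier else (10_000_000, 2)
    return float(max(fixed_cap, annual_turnover_eur * percent / 100))


# EUR 100 million turnover: 4% is EUR 4 million, so the EUR 20 million fixed cap applies.
print(gdpr_fine_ceiling_eur(100_000_000))    # 20000000.0
# EUR 2 billion turnover: 4% is EUR 80 million, which exceeds the fixed cap.
print(gdpr_fine_ceiling_eur(2_000_000_000))  # 80000000.0
```

For smaller organizations the fixed cap dominates. Once turnover passes €500 million, the percentage becomes the binding limit in the higher tier.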

Consider the implications: If you operate globally, you might be subject to multiple, sometimes conflicting, data sovereignty laws. Data generated in one country might need to stay within its borders, even for backup purposes. This directly impacts your choice of cloud providers and offsite storage locations. A robust data resilience strategy, therefore, must incorporate these legal and geographical constraints into its design.

Beyond external regulations, good data governance is about establishing internal policies and procedures for data lifecycle management. This includes:

- Data classification: Categorizing data by sensitivity (public, internal, confidential, highly confidential) to apply appropriate protection measures.

- Retention policies: Defining how long different types of data must be kept for legal, regulatory, or business reasons, and how it should be securely disposed of after its retention period.

- Access controls: Ensuring only authorized individuals have access to specific data, leveraging role-based access control (RBAC).

- Audit trails: Maintaining detailed logs of who accessed what data, when, and for what purpose, crucial for forensic analysis during an incident and for demonstrating compliance.
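Retention policies are a natural fit for automation. This minimal Python sketch flags backups whose retention period has ended, making them candidates for secure disposal. The classification names echo the list above, but the retention periods in `RETENTION` are placeholders. The real values come from your legal and regulatory obligations, not from this example.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta

# PLACEHOLDER retention periods per classification; real values come from legal review.
RETENTION = {
    "public": timedelta(days=30),
    "internal": timedelta(days=365),
    "confidential": timedelta(days=3 * 365),
    "highly confidential": timedelta(days=7 * 365),
}


@dataclass(frozen=True)
class BackupRecord:
    backup_id: str
    classification: str
    created: date


def due_for_disposal(records: list[BackupRecord], today: date) -> list[str]:
    """Return IDs of backups whose retention period has ended.

    An unknown classification raises KeyError on purpose: data that was never
    classified shouldn't be silently scheduled for deletion.
    """
    return [r.backup_id for r in records
            if today >= r.created + RETENTION[r.classification]]


records = [
    BackupRecord("web-assets-jan", "public", date(2025, 1, 1)),
    BackupRecord("hr-jan", "internal", date(2025, 1, 1)),
    BackupRecord("contracts-2020", "highly confidential", date(2020, 1, 1)),
]
print(due_for_disposal(records, today=date(2025, 3, 31)))  # ['web-assets-jan']
```

Writing each disposal decision to the audit trail closes the loop. You can then show not only that data was kept as long as required, but also when and why it was removed.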

In essence, your data resilience strategy needs to be auditable and defensible. You need to be able to prove, at a moment’s notice, that you are meeting your obligations to protect data, whether it’s for your customers, patients, or shareholders. It’s not just about recovering from a disaster; it’s about avoiding legal and financial disasters in the first place.

The Price of Silence: Understanding the True Cost of Data Loss

When we talk about data loss, it’s easy to focus on the immediate, tangible costs: the expense of data recovery, new hardware, perhaps a ransom payment. But the true cost runs far deeper, often hitting organizations with unforeseen financial and reputational impacts that can linger for years. It’s not just a monetary hit; it’s an existential one.

Let’s break down these often-hidden costs:

Tangible Costs:

- Recovery expenses: This includes everything from hiring forensic experts and incident response teams to purchasing new equipment and software licenses. It can also involve the cost of specialized data recovery services if your backups fail.

- Legal fees and fines: As discussed, regulatory bodies don’t play around. Fines for non-compliance with GDPR, HIPAA, or other data privacy laws can be astronomical. On top of that, you might face class-action lawsuits from affected customers or employees.

- Lost revenue during downtime: Every hour your systems are down is an hour you’re not making sales, processing transactions, or serving customers. For an e-commerce giant, this can mean millions per hour. For a smaller business, it could mean missing critical deadlines or losing contracts.

- Remediation costs: This involves patching vulnerabilities, rebuilding compromised systems, and implementing new security measures to prevent future attacks. It’s an investment you might not have budgeted for, and it can be substantial.

Intangible Costs (Often More Damaging):

- Reputational damage: This is perhaps the most insidious cost. A data breach or prolonged outage can shatter customer trust and severely tarnish your brand image. Once trust is lost, it’s incredibly difficult, sometimes impossible, to regain. Think about companies whose stock prices plummeted after major breaches; the market reacts swiftly and harshly.

- Loss of customer loyalty: Customers, especially in the digital age, have little patience for businesses that can’t protect their data. They’ll flock to competitors who demonstrate better security and reliability. That means a shrinking customer base and diminished market share.

- Decline in employee morale: Your team works hard, and seeing their efforts undermined by a data disaster can be incredibly demoralizing. It can lead to increased stress, burnout, and even employee turnover.

- Erosion of competitive advantage: If your competitors remained operational while you were down, they gained ground. If your intellectual property was stolen, they might have gained an unfair advantage.

Consider the average cost of a data breach. Once all these factors are aggregated, industry studies such as IBM’s annual Cost of a Data Breach report put it in the millions of dollars. That makes the investment in robust backup and recovery solutions, which might seem expensive upfront, look like an absolute bargain. Proactive spending on data resilience isn’t just a cost; it’s an indispensable investment in your organization’s future solvency and reputation.

Looking Ahead: The Horizon of Data Resilience

The landscape of data protection isn’t static; it’s a dynamic, ever-evolving field. As new technologies emerge and threats grow more sophisticated, so too do the strategies and tools for data resilience. It’s an exciting, if sometimes dizzying, future.

Artificial Intelligence (AI) and Machine Learning (ML) are already playing a significant role. AI-powered tools can analyze vast amounts of data in real time, identifying anomalous behaviors that might indicate a developing cyberattack long before human eyes could spot them. Think about AI identifying unusual access patterns to a backup repository or detecting ransomware encryption activity in its earliest stages. This proactive threat detection will become increasingly vital in minimizing the impact of breaches.
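Production tools use far more sophisticated models, but the core idea of spotting ‘unusual’ behavior can be shown with plain statistics. This Python sketch is a deliberately simple stand-in, not AI or ML. It flags a backup run in which the fraction of changed files jumps far above recent history, a pattern consistent with mass encryption. The three-standard-deviation threshold and the sample numbers are assumptions chosen for illustration.

```python
from __future__ import annotations

import statistics


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it is more than `threshold` standard deviations above the historical mean."""
    if len(history) < 2:
        return False  # too little history to estimate normal variation
    mean = statistics.mean(history)
    spread = statistics.stdev(history)
    if spread == 0:
        return latest > mean  # perfectly stable history: any increase is unusual
    return (latest - mean) / spread > threshold


# Fraction of files changed in each of the last five nightly backups (illustrative numbers).
history = [0.02, 0.03, 0.025, 0.02, 0.03]
print(is_anomalous(history, 0.035))  # False: a slightly busy day
print(is_anomalous(history, 0.60))   # True: 60% of files rewritten overnight
```

A check like this is useful precisely because it runs at backup time. It can raise an alert before a suspicious backup rotates out older, clean restore points.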

Blockchain technology, while often associated with cryptocurrencies, holds fascinating potential for data integrity. Its immutable, decentralized ledger system could be used to verify the authenticity and integrity of backups, creating an unalterable record of data modifications and ensuring that your recovery points haven’t been tampered with. Imagine a blockchain-verified backup, providing cryptographic proof of its untouched state; that’s a powerful assurance.
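The tamper-evidence part of that idea doesn’t require a full blockchain. This minimal Python sketch builds a hash chain, the building block blockchains use. Each entry stores the hash of the previous one, so altering any past backup manifest breaks the chain from that point on. The manifest fields are hypothetical. Note one limitation: an attacker who can rewrite the whole chain can recompute every hash. The latest hash therefore needs to be kept somewhere attackers can’t reach, such as immutable storage.

```python
from __future__ import annotations

import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, manifest: dict) -> str:
    """Hash the previous link together with this entry's manifest (canonical JSON)."""
    payload = json.dumps({"prev": prev_hash, "manifest": manifest}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(chain: list[dict], manifest: dict) -> None:
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"manifest": manifest, "prev": prev, "hash": entry_hash(prev, manifest)})


def verify(chain: list[dict]) -> bool:
    """Recompute every link; any edited manifest or broken link makes this False."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["manifest"]):
            return False
        prev = entry["hash"]
    return True


chain: list[dict] = []
append(chain, {"backup": "nightly-2025-03-30", "archive_sha256": "placeholder-1"})
append(chain, {"backup": "nightly-2025-03-31", "archive_sha256": "placeholder-2"})
print(verify(chain))  # True
chain[0]["manifest"]["archive_sha256"] = "tampered"
print(verify(chain))  # False
```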

Edge computing is another area reshaping data strategy. As more data is processed closer to its source (at the ‘edge’ of the network, like IoT devices or remote offices), the challenge of protecting that distributed data intensifies. This will necessitate more localized backup solutions and sophisticated data synchronization strategies, extending the reach of data resilience far beyond the traditional data center.

And then there’s quantum computing. It’s still in its nascent stages, but a sufficiently powerful quantum computer could theoretically break widely used public-key algorithms such as RSA and elliptic-curve cryptography. This means we’ll need ‘quantum-safe’ (post-quantum) cryptographic algorithms for future data protection. Researchers and standards bodies are already tackling that challenge, and NIST published its first post-quantum cryptography standards in 2024. It sounds like science fiction, but it’s on the horizon, and forward-thinking organizations are already keeping an eye on its implications for long-term data security.

The future of data resilience isn’t just about backups; it’s about predictive capabilities, self-healing systems, and adaptive defenses that learn and evolve alongside the threats. It’s a continuous journey, not a destination.

Conclusion: Your Data, Your Responsibility

In conclusion, as cyber threats continue their relentless evolution and the volume of critical data explodes, adopting a robust, multi-layered data resilience strategy isn’t merely a good idea; it’s an absolute imperative. It’s no longer a conversation just for the IT department, you know? It’s a boardroom-level discussion, something woven into the fabric of every department.

From diligently implementing the foundational 3-2-1 backup rule and embracing intelligent hybrid storage solutions, to rigorously testing your recovery plans and cultivating a strong security-aware culture among your employees – every step counts. Ensuring data immutability and integrating sophisticated automation tools fortify your defenses, providing that crucial peace of mind in an unpredictable world. Furthermore, understanding the intricate web of regulatory compliance and the true, often hidden, costs of data loss underscores the gravity of this challenge. And as we gaze into the future, it’s clear that continuous adaptation, driven by innovation, will be key to staying ahead.

By taking proactive, comprehensive measures, organizations can ensure data integrity, maintain unwavering availability, and safeguard their entire operational framework against potential disruptions. Your data isn’t just a collection of bits and bytes; it’s the story of your business, and frankly, its future. Protect it like your business depends on it, because, truly, it does.

The discussion around the human element in data protection is spot on. How are organizations successfully measuring the effectiveness of their security awareness training programs to ensure lasting behavioral changes and a true culture of data protection?

That’s a crucial point! Measuring the impact of security awareness programs is definitely a challenge. Some organizations use simulated phishing campaigns and track click-through rates before and after training. Another method is incorporating security-related questions into employee performance reviews and tracking improvements over time, fostering a security mindset.

Editor: StorageTech.News

Thank you to our Sponsor Esdebe

The discussion of air-gapping highlights a practical approach to data security, especially against ransomware. Given the increasing sophistication of cyberattacks, how do organizations balance the need for air-gapped backups with the desire for quick and efficient data recovery in the event of a disaster?

That’s an excellent question! The balance truly lies in a well-orchestrated hybrid approach. While air-gapping offers robust protection, it can slow down recovery. Many orgs are now using automated tools that periodically ‘snapshot’ data to immutable storage for quicker restores, alongside less frequent, but fully air-gapped backups for worst-case scenarios. The key is striking a balance that aligns with specific RTOs and RPOs.

Editor: StorageTech.News
