Abstract

Non-Volatile Memory Express (NVMe) Solid State Drives (SSDs) have revolutionized data storage, offering significant performance improvements over traditional Hard Disk Drives (HDDs) and Serial Attached SCSI (SAS) SSDs. This report provides a comprehensive analysis of NVMe SSDs, exploring their architectural underpinnings, performance characteristics, endurance limitations, cost considerations, and comparisons to competing storage technologies. The report delves into the intricacies of various NVMe form factors, interfaces, and protocols, examining their suitability for diverse workloads ranging from high-performance computing (HPC) and data centers to edge computing applications. Furthermore, it investigates common failure modes and explores advanced data recovery techniques specific to NVMe SSDs. Finally, it discusses emerging trends in NVMe technology, including the adoption of Computational Storage, Key Value SSDs, and disaggregated architectures, highlighting their potential to shape the future of data storage.

Many thanks to our sponsor Esdebe who helped us prepare this research report.

1. Introduction

Solid-state drives (SSDs) have become ubiquitous in modern computing environments, driven by the increasing demand for faster data access and reduced latency. Among SSD technologies, NVMe SSDs stand out due to their superior performance capabilities compared to legacy interfaces like SATA and SAS. Leveraging the PCIe bus and the NVMe protocol, these drives offer significantly higher bandwidth, lower latency, and improved Input/Output Operations Per Second (IOPS), enabling substantial improvements in application performance and overall system responsiveness. This report will provide an in-depth exploration of NVMe SSD technology, addressing its underlying architecture, performance characteristics, reliability concerns, and future technological advancements.

The NVMe specification was designed from the ground up to exploit the parallelism inherent in NAND flash memory, unlike older protocols that were originally developed for spinning disks. This allows NVMe SSDs to efficiently handle multiple concurrent I/O requests, resulting in significantly higher throughput and lower latency. The adoption of NVMe has been accelerated by the decreasing cost of flash memory and the increasing demands of modern applications, such as artificial intelligence, machine learning, and big data analytics, which require rapid access to massive datasets.


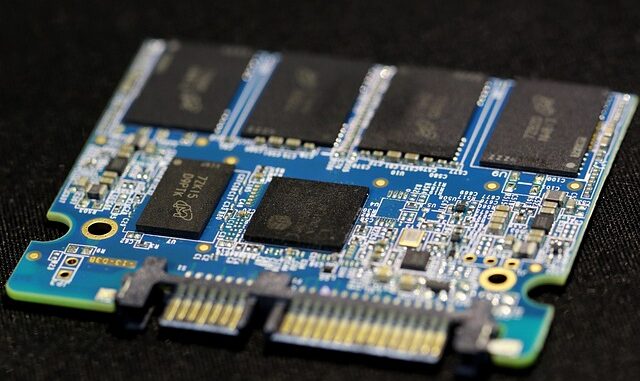

2. Architecture of NVMe SSDs

The architectural design of NVMe SSDs is critical to understanding their performance capabilities. Key architectural components include the controller, flash memory, DRAM cache (optional), and the host interface.

2.1. Controller

The NVMe controller is the central processing unit of the SSD, responsible for managing all operations, including command processing, data transfer, error correction, and wear leveling. Modern NVMe controllers are typically multi-core processors with dedicated hardware accelerators for tasks such as encryption and data compression. The controller’s efficiency is a primary determinant of the overall drive performance.

Unlike SATA and SAS controllers, which are limited by the constraints of their respective protocols, NVMe controllers communicate with the host CPU directly over the PCIe bus. This direct connection eliminates the overhead associated with legacy interfaces, reducing latency and increasing throughput. Furthermore, the NVMe specification allows up to 65,535 I/O queues, each holding up to 65,536 commands, whereas SATA's AHCI interface offers a single queue of 32 commands. This parallelism lets the controller service many I/O requests concurrently and is essential for achieving high IOPS.
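
The queueing model described above can be illustrated with a toy simulation. This is only a sketch: the `QueuePair` class and its method names are hypothetical, the one-queue-per-core layout is an assumption about a common host configuration, and real NVMe queues are ring buffers in host memory signalled through doorbell registers rather than Python objects.

```python
from collections import deque


class QueuePair:
    """Toy model of one submission/completion queue pair (hypothetical names)."""

    def __init__(self, depth):
        self.depth = depth          # maximum outstanding commands
        self.submission = deque()   # host -> device
        self.completion = deque()   # device -> host

    def submit(self, command):
        """Host side: enqueue a command; return False if the queue is full."""
        if len(self.submission) >= self.depth:
            return False
        self.submission.append(command)
        return True

    def process(self):
        """Device side: consume every pending command and post a completion."""
        while self.submission:
            self.completion.append((self.submission.popleft(), "ok"))

    def reap(self):
        """Host side: collect and clear all posted completions."""
        done = list(self.completion)
        self.completion.clear()
        return done


# Assumption: one queue pair per CPU core, so cores never share a queue.
queues = [QueuePair(depth=4) for _ in range(2)]
queues[0].submit("read LBA 0")
queues[1].submit("write LBA 8")
for q in queues:
    q.process()
results = [q.reap() for q in queues]
print(results)
```

Because each queue pair is independent, no lock is shared between the two submitters, which is the property that lets the queue count scale with the number of cores.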

The number of channels connected to the flash memory is another crucial aspect of the controller design. More channels allow for greater parallelism in data transfer, leading to higher bandwidth. High-performance NVMe SSDs typically employ controllers with a large number of channels to maximize throughput.

2.2. Flash Memory

The flash memory is the non-volatile storage medium where data is physically stored. NVMe SSDs primarily utilize NAND flash memory, which is available in various cell types, including Single-Level Cell (SLC), Multi-Level Cell (MLC), Triple-Level Cell (TLC), and Quad-Level Cell (QLC). Each cell type offers different trade-offs between performance, endurance, and cost.

- SLC: Provides the highest performance and endurance but is also the most expensive. Typically used in enterprise-grade SSDs where reliability is paramount.

- MLC: Offers a balance between performance, endurance, and cost. Commonly used in high-end consumer and professional SSDs.

- TLC: Provides higher storage density at a lower cost but offers lower performance and endurance compared to SLC and MLC. Widely used in mainstream consumer SSDs.

- QLC: Offers the highest storage density and lowest cost per bit but suffers from the lowest performance and endurance. Primarily used in cost-sensitive applications.

The choice of flash memory cell type significantly impacts the overall performance and lifespan of the SSD. Advanced error correction codes (ECC) and wear-leveling algorithms are employed to mitigate the limitations of lower-endurance flash memory technologies like TLC and QLC.
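
One way to see why endurance falls as density rises is to count the voltage states each cell must distinguish. The short sketch below assumes the usual bits-per-cell figures for each cell type (1 through 4); the function name is illustrative only.

```python
# Bits stored per cell for each NAND cell type.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def voltage_states(bits_per_cell):
    """A cell storing n bits must distinguish 2**n charge levels."""
    return 2 ** bits_per_cell


states = {name: voltage_states(bits) for name, bits in CELL_TYPES.items()}
for name, count in states.items():
    print(f"{name}: {count} voltage states")
```

More states packed into the same voltage window leave narrower margins between adjacent levels, so the same amount of cell wear causes read errors sooner in TLC and QLC than in SLC.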

2.3. DRAM Cache

Many NVMe SSDs include a DRAM cache to improve performance. The DRAM primarily holds the flash translation layer (FTL) mapping table, which translates logical block addresses to physical flash locations, and it can also buffer incoming writes and recently accessed data. Keeping this information in DRAM reduces the need to access the slower flash memory directly, which can significantly improve latency and IOPS, particularly for random workloads.

However, the use of a DRAM cache introduces a potential data loss risk in the event of a power failure. To mitigate this risk, some SSDs incorporate power loss protection (PLP) circuitry, which uses capacitors to supply enough power to flush the DRAM cache to the flash memory when power is lost. SSDs without a DRAM cache hold less volatile state, although data still in flight inside the controller can be lost on any drive that lacks PLP.

2.4. Host Interface

NVMe SSDs connect to the host system via the PCIe bus, providing a high-bandwidth interface for data transfer. Each PCIe generation doubles the per-lane signaling rate: PCIe Gen3 runs at 8 GT/s per lane, PCIe Gen4 at 16 GT/s, and PCIe Gen5 at 32 GT/s. After 128b/130b encoding overhead, this corresponds to roughly 1, 2, and 4 GB/s of usable bandwidth per lane in each direction. Current systems support up to PCIe Gen5, and Gen6 is emerging.

The number of PCIe lanes also affects the overall performance. NVMe SSDs typically use four lanes (x4), providing a theoretical maximum bandwidth of about 8 GB/s for PCIe Gen4 and about 16 GB/s for PCIe Gen5 in each direction. The actual achievable bandwidth is lower due to protocol overhead and other system limitations.
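
The link bandwidth for a given generation and lane count can be worked out from the signaling rate. The sketch below assumes 128b/130b line encoding (used by PCIe Gen3 through Gen5) and ignores packet-level protocol overhead, so the results are upper bounds; the function and table names are illustrative.

```python
# Per-lane signaling rate in gigatransfers per second.
GT_PER_S = {3: 8, 4: 16, 5: 32}

# PCIe Gen3-Gen5 carry 128 payload bits in every 130 bits on the wire.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_s(gen, lanes):
    """Theoretical one-direction bandwidth in GB/s, before packet overhead."""
    bits_per_second = GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes
    return bits_per_second / 8  # 8 bits per byte


for gen in (3, 4, 5):
    print(f"PCIe Gen{gen} x4: {pcie_bandwidth_gb_s(gen, 4):.2f} GB/s")
```

For an x4 link this yields approximately 3.94, 7.88, and 15.75 GB/s for Gen3, Gen4, and Gen5 respectively.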


3. Performance Characteristics

The performance of NVMe SSDs is characterized by several key metrics, including latency, throughput, and IOPS.

3.1. Latency

Latency refers to the time it takes for the SSD to respond to a read or write request. NVMe SSDs offer significantly lower latency compared to traditional HDDs and SATA SSDs. Typical latency values for NVMe SSDs range from tens of microseconds to a few hundred microseconds, whereas HDDs typically exhibit latencies in the milliseconds range. This reduction in latency is crucial for improving the responsiveness of applications and reducing overall system wait times.

3.2. Throughput

Throughput, also known as bandwidth, refers to the rate at which data can be transferred between the SSD and the host system. NVMe SSDs offer significantly higher throughput than SATA SSDs. High-performance NVMe SSDs can achieve sequential read and write speeds of several gigabytes per second, whereas SATA SSDs are capped at roughly 600 MB/s by the 6 Gb/s SATA interface. PCIe Gen5 SSDs push this even further, with sequential read speeds surpassing 10 GB/s.

3.3. IOPS

IOPS (Input/Output Operations Per Second) measures the number of read or write operations that the SSD can perform per second. NVMe SSDs excel in IOPS performance due to their ability to handle multiple concurrent I/O requests. High-performance NVMe SSDs can achieve hundreds of thousands or even millions of IOPS, whereas HDDs are typically limited to a few hundred IOPS. This high IOPS performance is essential for applications that involve a large number of small random reads and writes, such as database servers and virtualized environments.

The IOPS performance of an NVMe SSD is influenced by several factors, including the controller’s processing power, the number of flash memory channels, and the queue depth. Higher queue depths allow the SSD to handle more concurrent I/O requests, resulting in higher IOPS.
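
The relationship between queue depth, latency, and IOPS can be approximated with Little's law. The following is a back-of-the-envelope sketch that assumes a steady state and a constant average latency; real drives saturate, so latency rises once the controller or flash channels are fully busy. The function names and the example figures (queue depth 32, 100 microseconds, 4 KiB blocks) are illustrative, not measurements.

```python
def iops_from_latency(queue_depth, latency_us):
    """Little's law: throughput = outstanding requests / average latency."""
    return queue_depth * 1_000_000 / latency_us


def throughput_mb_s(iops, block_size_bytes):
    """Convert an I/O rate into data throughput in MB/s (10**6 bytes)."""
    return iops * block_size_bytes / 1e6


iops = iops_from_latency(queue_depth=32, latency_us=100)
print(f"{iops:.0f} IOPS")
print(f"{throughput_mb_s(iops, 4096):.2f} MB/s at 4 KiB per request")
```

With the same 100 microsecond latency, a queue depth of 1 would yield only 10,000 IOPS, which is why deep queues matter for benchmarks and highly parallel workloads.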


4. Endurance and Reliability

Endurance refers to the amount of data that can be written to an SSD before it reaches the end of its lifespan. SSD endurance is typically measured in Terabytes Written (TBW) or Drive Writes Per Day (DWPD). The endurance of an SSD is primarily determined by the type of flash memory used and the wear-leveling algorithms employed by the controller.
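
The two endurance metrics are related through the drive capacity and the warranty period. The sketch below shows the conversion in both directions; the function names are illustrative, and the example drive (1 TB, 600 TBW, 5-year warranty) is hypothetical rather than taken from any datasheet.

```python
DAYS_PER_YEAR = 365


def dwpd_from_tbw(tbw, capacity_tb, warranty_years):
    """Drive Writes Per Day implied by a TBW rating over the warranty period."""
    return tbw / (capacity_tb * warranty_years * DAYS_PER_YEAR)


def tbw_from_dwpd(dwpd, capacity_tb, warranty_years):
    """Terabytes Written implied by a DWPD rating over the warranty period."""
    return dwpd * capacity_tb * warranty_years * DAYS_PER_YEAR


# Hypothetical 1 TB drive rated for 600 TBW with a 5-year warranty.
print(f"{dwpd_from_tbw(600, 1, 5):.2f} DWPD")
```

A rating of 1 DWPD on the same hypothetical 1 TB drive over 5 years would correspond to 1,825 TBW.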

4.1. Flash Memory Endurance

Flash memory cells wear out a little with each program/erase (P/E) cycle, and the number of cycles a cell can tolerate falls as more bits are stored per cell. SLC flash memory offers the highest endurance, followed by MLC, TLC, and QLC. Enterprise-grade SSDs have traditionally used SLC or MLC flash memory to ensure high endurance, although many now use TLC with generous over-provisioning, whereas consumer-grade SSDs often use TLC or QLC flash memory.

4.2. Wear Leveling

Wear leveling is a technique used to distribute write operations evenly across all flash memory blocks, preventing any single block from being overused. Advanced wear-leveling algorithms can significantly extend the lifespan of SSDs, particularly those utilizing lower-endurance flash memory technologies like TLC and QLC.
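
A minimal form of this idea always writes to the free block with the fewest erases. The sketch below is a simplified illustration, not how any particular controller works: the `WearLeveler` class is hypothetical, and production firmware also relocates rarely changed data (static wear leveling) and coordinates with garbage collection.

```python
import heapq


class WearLeveler:
    """Toy allocator that hands out the least-erased free block first."""

    def __init__(self, num_blocks):
        # Min-heap of (erase_count, block_id); all blocks start unworn.
        self.free = [(0, block) for block in range(num_blocks)]
        heapq.heapify(self.free)

    def allocate(self):
        """Return (block_id, erase_count) for the least-worn free block."""
        erase_count, block = heapq.heappop(self.free)
        return block, erase_count

    def release(self, block, erase_count):
        """Erase the block and return it to the free pool with one more erase."""
        heapq.heappush(self.free, (erase_count + 1, block))


leveler = WearLeveler(num_blocks=4)
counts = [0] * 4
for _ in range(100):
    block, erases = leveler.allocate()
    leveler.release(block, erases)
    counts[block] = erases + 1
print(counts)
```

After 100 write-and-erase rounds over 4 blocks, every block has been erased 25 times, whereas a naive allocator that always reused the first block would have concentrated all 100 erases on it.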

4.3. Error Correction

Error correction codes (ECC) are used to detect and correct errors that may occur in flash memory cells. Modern NVMe SSDs employ sophisticated ECC algorithms, such as Low-Density Parity-Check (LDPC) codes, to ensure data integrity and reliability.

4.4. Over-Provisioning

Over-provisioning refers to the practice of reserving a portion of the SSD’s total storage capacity for internal use, such as wear leveling and bad block management. Over-provisioning can improve the endurance and performance of SSDs, particularly under heavy workloads.
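
The over-provisioning ratio is commonly quoted as the reserved capacity relative to the user-visible capacity. The sketch below uses that definition, which is an assumption since vendors do not all report it the same way; the function name and the capacities are hypothetical.

```python
def over_provisioning_pct(physical_gb, user_gb):
    """Reserved capacity as a percentage of user-visible capacity."""
    return 100 * (physical_gb - user_gb) / user_gb


# Hypothetical drive: 1,024 GB of raw flash exposed as 960 GB to the host.
print(f"{over_provisioning_pct(1024, 960):.1f}% over-provisioning")
```

Exposing less of the same raw flash (for example 800 GB instead of 960 GB) raises the ratio to 28%, which is the kind of trade-off made on write-intensive enterprise models.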

4.5. Failure Modes

Common failure modes for NVMe SSDs include:

- Flash Memory Degradation: As flash memory cells are repeatedly programmed and erased, they gradually degrade, eventually leading to data loss.

- Controller Failure: The NVMe controller can fail due to hardware defects or software errors.

- Power Failure: Sudden power outages can corrupt data stored in the DRAM cache (if present) and potentially damage the SSD’s internal circuitry.

- Firmware Bugs: Firmware bugs can lead to data corruption or drive instability.

4.6. Data Recovery

Data recovery from failed NVMe SSDs can be challenging due to the complex architecture and encryption technologies employed. Traditional data recovery techniques used for HDDs are often ineffective for SSDs. Specialized data recovery tools and techniques are required to access and recover data from damaged NVMe SSDs.

Data recovery from failed NVMe SSDs can involve:

- Chip-off Recovery: Removing the flash memory chips from the SSD and reading the data directly using specialized equipment.

- Controller Emulation: Recreating the SSD’s controller functionality in software to access the data.

- Forensic Analysis: Analyzing the SSD’s firmware and data structures to identify and recover lost data.

The success of data recovery depends on the severity of the damage and the expertise of the data recovery specialists.


5. Form Factors, Interfaces, and Protocols

NVMe SSDs are available in various form factors, interfaces, and protocols, each offering different advantages and disadvantages.

5.1. Form Factors

- U.2 (SFF-8639): A 2.5-inch form factor typically used in enterprise environments. U.2 SSDs offer high capacity and performance but require a specific U.2 connector.

- M.2 (NGFF): A small form factor widely used in laptops, desktops, and embedded systems. M.2 SSDs are available in various lengths and widths, with 2280 (22mm wide, 80mm long) being the most common size. Supports both SATA and NVMe protocols, so it’s essential to check compatibility.

- Add-in Card (AIC): A PCIe card that plugs directly into a PCIe slot. AIC SSDs offer the highest performance and capacity but require a dedicated PCIe slot.

- EDSFF (Enterprise and Data Center Standard Form Factor): A family of form factors designed for high-density storage in data centers. EDSFF includes various sizes and shapes, offering flexibility in system design.
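
M.2 size codes such as 2280 encode the card dimensions directly: the first two digits give the width in millimetres and the remaining digits give the length. The helper below is a small illustrative sketch (the function name is hypothetical) for decoding such codes when checking whether a drive fits a slot.

```python
def parse_m2_size(code):
    """Split an M.2 size code into (width_mm, length_mm)."""
    if not code.isdigit() or len(code) not in (4, 5):
        raise ValueError(f"unexpected M.2 size code: {code!r}")
    return int(code[:2]), int(code[2:])


for code in ("2230", "2242", "2280", "22110"):
    width, length = parse_m2_size(code)
    print(f"M.2 {code}: {width} mm wide, {length} mm long")
```

The size code says nothing about the protocol, so a drive that fits physically may still be SATA rather than NVMe.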

5.2. Interfaces

- PCIe Gen3/4/5: NVMe SSDs utilize the PCIe bus for data transfer. PCIe Gen3 signals at 8 GT/s per lane, PCIe Gen4 doubles that to 16 GT/s per lane, and PCIe Gen5 doubles it again to 32 GT/s per lane. The number of PCIe lanes also affects the overall performance; NVMe SSDs typically use four lanes (x4).

5.3. Protocols

- NVMe (Non-Volatile Memory Express): A high-performance protocol designed specifically for SSDs. NVMe offers significantly lower latency and higher throughput compared to legacy protocols like SATA and SAS.

- NVMe over Fabrics (NVMe-oF): An extension of the NVMe protocol that allows NVMe SSDs to be accessed over a network. NVMe-oF enables the creation of shared storage pools and disaggregated storage architectures. Common transports for NVMe-oF include RDMA (Remote Direct Memory Access) over Converged Ethernet (RoCE), Fibre Channel (FC), and TCP.


6. Suitability for Various Workloads

NVMe SSDs are well-suited for a wide range of workloads, including:

- High-Performance Computing (HPC): NVMe SSDs provide the high throughput and low latency required for HPC applications, such as scientific simulations and data analysis.

- Data Centers: NVMe SSDs enable faster application performance, improved server utilization, and reduced latency in data center environments.

- Enterprise Storage: NVMe SSDs offer the performance and reliability required for enterprise storage applications, such as databases, virtualized environments, and cloud computing.

- Gaming: NVMe SSDs reduce game loading times and improve overall gaming performance.

- Video Editing: NVMe SSDs enable faster video editing and rendering.

- AI/ML: High-speed data access is crucial for training large models, and NVMe-based storage can substantially shorten training times by keeping accelerators supplied with data.


7. Cost Considerations

The cost of NVMe SSDs has decreased significantly in recent years, making them affordable for a wider range of applications. However, NVMe SSDs are still generally more expensive per gigabyte than traditional HDDs. The cost per gigabyte varies with the flash memory type, capacity, and performance class. The total cost of ownership (TCO) should also be considered, taking into account power consumption, cooling requirements, and maintenance costs. The improved performance and reduced latency often justify the higher initial cost of NVMe SSDs, particularly for performance-critical applications. In addition, the endurance of NVMe SSDs is steadily improving, which should be factored into the calculation.


8. Emerging Trends

Several emerging trends are shaping the future of NVMe SSD technology.

8.1. Computational Storage

Computational Storage integrates processing capabilities directly into the SSD, allowing data to be processed closer to the storage device. This can reduce data transfer overhead and improve overall system performance. Computational Storage is particularly well-suited for applications such as data analytics, image processing, and machine learning.

8.2. Key Value SSDs

Key Value SSDs store data as key-value pairs, eliminating the need for traditional block-based storage. This can improve performance and efficiency for applications that utilize key-value data models, such as NoSQL databases and object storage systems.

8.3. Zoned Namespaces (ZNS)

Zoned Namespaces (ZNS) is a specification that divides the SSD into zones, each of which can only be written sequentially. This allows for more efficient data management and can improve the endurance and performance of the SSD. ZNS SSDs are well-suited for write-intensive workloads, such as log aggregation and database journaling.
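
The sequential-write rule can be modelled with a per-zone write pointer. The sketch below is a simplified illustration of that constraint only: the `Zone` class and its methods are hypothetical and do not mirror the actual ZNS command set, which also defines zone states, zone append, and limits on open zones.

```python
class Zone:
    """Toy model of a zone that accepts writes only at its write pointer."""

    def __init__(self, start_lba, size):
        self.start = start_lba
        self.size = size
        self.write_pointer = start_lba

    def write(self, lba, num_blocks):
        """Accept a write only if it begins exactly at the write pointer."""
        if lba != self.write_pointer:
            raise ValueError("write must start at the zone's write pointer")
        if self.write_pointer + num_blocks > self.start + self.size:
            raise ValueError("write would exceed the zone capacity")
        self.write_pointer += num_blocks

    def reset(self):
        """Discard the zone's contents so it can be rewritten from the start."""
        self.write_pointer = self.start


zone = Zone(start_lba=0, size=8)
zone.write(0, 4)
zone.write(4, 2)
print(zone.write_pointer)
```

Because the host can never overwrite data in place, the drive no longer needs to relocate valid pages behind the host's back, which is where the endurance and performance benefits come from.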

8.4. Disaggregated Storage

Disaggregated storage architectures separate storage resources from compute resources, allowing for greater flexibility and scalability. NVMe-oF enables the creation of disaggregated storage pools, allowing applications to access storage resources over a network. This can improve resource utilization and reduce infrastructure costs.

8.5. PCIe Gen6 and Beyond

The development of PCIe Gen6 and future generations will continue to drive performance improvements in NVMe SSDs. PCIe Gen6 doubles the per-lane signaling rate again, to 64 GT/s, enabling even higher bandwidth and further expanding the capabilities of NVMe technology. At the time of writing, PCIe Gen6 was expected to appear first in high-end server products, in late 2024.


9. Conclusion

NVMe SSDs have become the dominant storage technology for a wide range of applications, offering significant performance improvements over traditional HDDs and SATA SSDs. The NVMe protocol, combined with the PCIe bus, enables high throughput, low latency, and improved IOPS, making NVMe SSDs well-suited for performance-critical workloads. As the cost of flash memory continues to decrease and new technologies such as Computational Storage and NVMe-oF emerge, NVMe SSDs will continue to play an increasingly important role in modern computing environments.



Given the increasing density of QLC flash memory, what are the practical limitations in terms of write endurance for sustained workloads in enterprise environments, and how can these be effectively mitigated?

That’s a great question! The endurance challenges with QLC in enterprise settings are significant, but techniques like advanced wear leveling and over-provisioning are helping a lot. I think the key is workload analysis and intelligent data placement. Has anyone had practical experience optimizing QLC for specific enterprise applications?

Editor: StorageTech.News


Given the increasing adoption of NVMe-oF for disaggregated storage, what are the primary challenges in ensuring consistent performance and low latency across network fabrics, particularly when dealing with varying network congestion and distances?

That’s a great point! As NVMe-oF adoption grows, managing network fabric performance is crucial. We’ve seen congestion and distance significantly impact latency. Robust QoS mechanisms and adaptive routing protocols are definitely key to mitigating these challenges. It’s an evolving area and worth further research!

Editor: StorageTech.News


The report mentions advanced data recovery for NVMe SSDs. Could you elaborate on the cost-effectiveness and practicality of these specialized techniques for smaller businesses versus large enterprises?

That’s a really important consideration! While advanced data recovery methods are powerful, the cost can be a barrier. For smaller businesses, cloud backups and robust redundancy strategies might be more practical and cost-effective initially. Larger enterprises often have dedicated IT and budget for specialized recovery services. What are your thoughts on balancing cost vs. recovery capabilities?

Editor: StorageTech.News


The report highlights the impact of NVMe SSDs on AI/ML workloads. How might computational storage, by reducing data movement, further accelerate model training and inference, especially with the increasing size and complexity of datasets?

That’s an excellent question! The potential for computational storage to boost AI/ML is huge. By processing data closer to the source, we can minimize bottlenecks and drastically cut down on data movement, especially as datasets get larger. It will be interesting to see what new hardware will be produced! Are there any resources that you’d recommend to stay updated on trends?

Editor: StorageTech.News


So, we’re just casually revolutionizing data storage now? Disaggregated storage sounds promising, but what happens when the network gremlins decide to throw a party? Latency becomes the new bottleneck, perhaps?

That’s a really important point! You’re right, network reliability is paramount for disaggregated storage. Redundancy and robust network protocols are critical to mitigate potential latency issues. The industry is exploring advanced techniques such as path aggregation to improve resilience. What are some network monitoring tools you think are essential?

Editor: StorageTech.News


“Revolutions are messy! Wondering when computational storage will stop being ‘emerging’ and actually, you know, emerge. Anyone seen it in action outside a lab?”

That’s a great question! While widespread adoption is still on the horizon, computational storage is gaining traction in edge computing and AI inference applications. The ability to process data locally significantly reduces latency and bandwidth usage. Have you encountered any specific use cases that you would find beneficial?

Editor: StorageTech.News


NVMe drives improving gaming performance? Finally, a valid excuse for my abysmal K/D ratio. Blaming lag is so last decade.

Haha, that’s great! It’s always good to have a scapegoat, right? But seriously, NVMe drives *can* make a noticeable difference in load times and overall responsiveness, which *could* translate to a slight edge. What games do you play the most? I wonder how they specifically benefit!

Editor: StorageTech.News
