Abstract

Hyper-Converged Infrastructure (HCI) has fundamentally reshaped the landscape of data center architecture, moving beyond traditional siloed approaches to a unified, software-defined platform that seamlessly integrates compute, storage, and networking resources. This comprehensive research report meticulously examines the foundational architectural principles underpinning HCI, delving into concepts such as software-defined storage (SDS), distributed data planes, and advanced virtualization layers. It provides an in-depth comparative analysis of leading vendor solutions, including Nutanix, VMware vSAN, HPE SimpliVity, and Dell EMC VxRail, highlighting their unique value propositions, technical differentiators, and strategic market positioning. Furthermore, the report outlines pragmatic implementation strategies, from initial needs assessment and pilot deployments to full-scale rollout and ongoing lifecycle management, emphasizing best practices for successful adoption. A significant portion is dedicated to exploring the multifaceted benefits of HCI, encompassing substantial cost savings through reduced capital and operational expenditures, marked improvements in operational efficiency via simplified management and automation, and enhanced agility across a diverse spectrum of workloads. Specific attention is given to the utility of HCI in critical applications such as Virtual Desktop Infrastructure (VDI), high-performance database management, resilient Remote Office/Branch Office (ROBO) deployments, and emerging use cases in edge computing and hybrid cloud environments. By synthesizing these facets, the report offers a holistic understanding of HCI’s transformative role in modernizing enterprise IT infrastructure, driving innovation, and enabling business responsiveness in an increasingly data-intensive world.

Many thanks to our sponsor Esdebe who helped us prepare this research report.

1. Introduction: The Evolution of Data Center Architectures Towards Convergence

The trajectory of enterprise IT infrastructure has been a continuous quest for greater efficiency, scalability, and agility. For decades, data centers were characterized by a siloed architecture: distinct, independently managed components for compute (servers), storage (Storage Area Networks or Network-Attached Storage), and networking (switches, routers, firewalls). This traditional approach, while foundational for its time, progressively revealed significant limitations as business demands for rapid provisioning, data growth, and application performance escalated. Organizations frequently contended with complex integration challenges, vendor lock-in, resource underutilization, escalating operational costs, and protracted deployment cycles for new services.

Responding to these challenges, the concept of Converged Infrastructure (CI) emerged, aiming to pre-integrate compute, storage, and networking into a single, pre-validated solution from a single vendor. While CI offered a degree of simplification and reduced integration risk, it typically relied on distinct hardware components managed separately within a converged stack, often lacking the deep software integration needed for true flexibility and scalability.

Hyper-Converged Infrastructure (HCI) represents the next evolutionary leap in data center architecture. First gaining significant traction in the early 2010s, HCI takes the principles of convergence to a software-defined extreme. It collapses core data center functions—compute, storage, and networking—into a unified, software-centric platform running on industry-standard x86 servers. This profound integration simplifies management, enhances scalability, optimizes resource utilization, and fundamentally transforms the operational model of IT. By abstracting the underlying hardware and managing resources through a unified software layer, HCI enables IT organizations to provision and scale infrastructure with unprecedented speed and simplicity, making it a pivotal technology in contemporary digital transformation initiatives.

This report examines the architectural foundations of HCI, compares the offerings of prominent vendors, details effective implementation strategies, and describes the benefits HCI offers across a diverse range of enterprise workloads.

2. Architectural Principles of Hyper-Converged Infrastructure: The Software-Defined Foundation

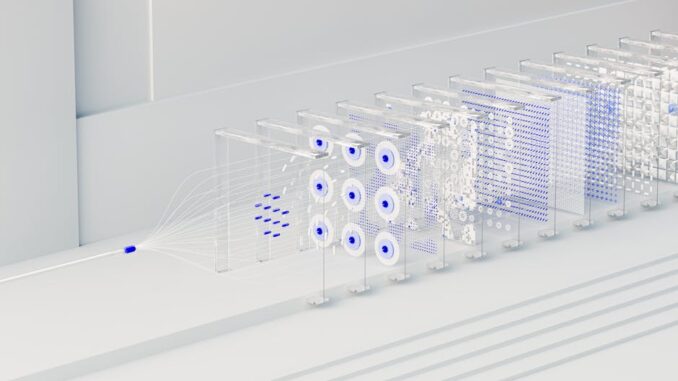

HCI is not merely a bundling of hardware components; it is a profound architectural shift driven by software. At its core, HCI transforms commodity hardware into an intelligent, distributed system by abstracting and pooling resources through robust software layers. This software-defined approach is what differentiates HCI from traditional or even converged infrastructure, enabling unparalleled flexibility and scalability.

2.1 Core Components and Software-Defined Layers

At the heart of every HCI solution lie three integrated software layers that work in concert:

- Virtualization Layer (Hypervisor): This is the foundational layer upon which HCI is built. A hypervisor (such as VMware ESXi, Microsoft Hyper-V, Nutanix AHV, or KVM) abstracts the physical compute resources (CPU, RAM) and provides the platform for virtual machines (VMs) and the HCI control plane. In an HCI context, the hypervisor not only hosts user workloads but, in many designs, also runs a Storage Controller Virtual Machine (CVM) or Storage Virtual Appliance (SVA) on each node. This CVM/SVA manages the node’s local storage and presents it as part of a distributed, shared pool across the cluster. Other designs, such as VMware vSAN, embed the storage logic in the hypervisor kernel instead of a separate VM.

- Software-Defined Storage (SDS): SDS is arguably the most transformative component of HCI. It virtualizes the storage resources across all nodes in the cluster, aggregating their local direct-attached storage (DAS) into a single, logical pool. This eliminates the need for expensive, complex Fibre Channel SANs or traditional NAS arrays. Key characteristics and functions of SDS within HCI include:

  - Distributed File System (DFS): Unlike traditional storage that uses a centralized array, HCI employs a distributed file system that spans all nodes. This allows data to be written and read from any node in the cluster, facilitating data locality and high availability.

  - Data Locality: A fundamental principle in many HCI designs, data locality means that whenever possible, a virtual machine’s data resides on the same node as the VM itself. This significantly reduces network latency between the compute and storage, enhancing application performance. When a VM moves (e.g., via vMotion), its data can follow or be accessed efficiently from other nodes.

  - Intelligent Data Services: HCI’s SDS layer provides a rich set of data services natively, often eliminating the need for separate appliances or software. These include:

    - Deduplication: Eliminates redundant copies of data blocks, saving storage space.

    - Compression: Reduces the size of data blocks, further improving storage efficiency.

    - Erasure Coding/Replication: Ensures data durability and resilience against node or disk failures. Replication (e.g., 2-copy or 3-copy) creates exact copies of data on different nodes, while erasure coding uses parity information to reconstruct data, offering similar protection with potentially greater storage efficiency for larger clusters.

    - Snapshots and Clones: Instantaneous, space-efficient point-in-time copies of VMs or data volumes, crucial for backup, disaster recovery, and test/development environments.

    - Tiering: Automatically moves data between different storage media (e.g., flash for hot data, HDD for cold data) based on access patterns, optimizing performance and cost.

- Software-Defined Networking (SDN) & Network Virtualization: While often less prominently advertised as a core ‘component’ compared to SDS and the hypervisor, robust network virtualization and integration are critical for HCI’s success. SDN principles enable centralized control over network configurations, enhancing flexibility and responsiveness within the HCI cluster. This includes:

  - Virtual Switches: Managing network traffic between VMs and to the physical network.

  - Micro-segmentation: Isolating individual VMs or applications from each other at a granular level, significantly enhancing security by preventing lateral movement of threats.

  - Quality of Service (QoS): Prioritizing network traffic for critical applications to ensure consistent performance.

  - Automated Network Provisioning: Integrating network configuration with VM deployment processes.
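The micro-segmentation idea described above can be illustrated with a default-deny allow-list: traffic between two VMs is permitted only if an explicit rule covers their groups and the destination port. The sketch below is a simplified model, not any vendor's policy engine; the `Rule` type, the group names, the VM names, and the ports are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One allowed flow between two VM groups (hypothetical model)."""
    src_group: str
    dst_group: str
    port: int


# Hypothetical policy: web may reach app, app may reach db, nothing else.
ALLOW_RULES = {
    Rule("web", "app", 8443),
    Rule("app", "db", 5432),
}

# Hypothetical mapping of VM names to security groups.
VM_GROUPS = {
    "web-01": "web",
    "app-01": "app",
    "db-01": "db",
}


def is_allowed(src_vm, dst_vm, port, rules=ALLOW_RULES, groups=VM_GROUPS):
    """Default deny: allow only flows that match an explicit rule."""
    src = groups.get(src_vm)
    dst = groups.get(dst_vm)
    if src is None or dst is None:
        return False  # unknown VMs are never trusted
    return Rule(src, dst, port) in rules


print(is_allowed("web-01", "app-01", 8443))  # permitted by the first rule
print(is_allowed("web-01", "db-01", 5432))   # no web-to-db rule, so denied
```

Because the web tier has no rule that reaches the database tier, a compromised web VM cannot talk to the database directly, which is the lateral-movement protection the text refers to.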

2.2 Node-Based Architecture and Distributed Principles

HCI systems are fundamentally built upon a modular, node-based architecture. Each physical node is a self-contained unit comprising compute (CPU, RAM), local direct-attached storage (SSDs, HDDs, or a hybrid combination), and network connectivity. This design facilitates several crucial architectural advantages:

- Linear Scalability (Scale-Out Model): Organizations can incrementally add nodes to an existing HCI cluster. As new nodes are added, both compute and storage capacity, as well as performance, expand proportionally. This ‘pay-as-you-grow’ model eliminates the need for expensive, disruptive forklift upgrades common in traditional infrastructure, allowing businesses to align IT spend directly with business growth.

- High Availability and Resilience: The distributed nature of HCI ensures exceptional resilience. Data is replicated or erasure-coded across multiple nodes in the cluster. In the event of a single node or disk failure, the system automatically self-heals by leveraging redundant data copies on other nodes, minimizing downtime and ensuring continuous workload operation. This inherent fault tolerance is a significant advantage over single points of failure in traditional environments.

- Shared-Nothing Architecture: Many HCI solutions employ a ‘shared-nothing’ architecture, meaning each node is independent and possesses its own local resources. While resources are logically pooled and presented as a single entity, there is no single shared storage backplane or controller that could become a bottleneck or single point of failure. This design contributes significantly to performance and resilience.
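To make the difference between replication and erasure coding concrete, the sketch below uses the simplest possible erasure code: a single XOR parity block per stripe, which can rebuild any one lost block. Production HCI platforms use more sophisticated codes and placement logic; the function names here are illustrative assumptions rather than any product's API.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def encode_stripe(data_blocks):
    """Return the data blocks plus one parity block (each would sit on a different node)."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild_missing(stripe, missing_index):
    """Rebuild the block at missing_index from all surviving blocks."""
    survivors = [b for i, b in enumerate(stripe) if i != missing_index]
    return xor_blocks(survivors)


def raw_overhead_replication(copies):
    """Raw capacity consumed per unit of user data with N full copies."""
    return float(copies)


def raw_overhead_erasure(data_fragments, parity_fragments):
    """Raw capacity consumed per unit of user data with k data + m parity fragments."""
    return (data_fragments + parity_fragments) / data_fragments


stripe = encode_stripe([b"node", b"disk", b"data", b"pool"])
print(rebuild_missing(stripe, 2))      # recovers the third data block
print(raw_overhead_replication(2))     # two full copies
print(raw_overhead_erasure(4, 1))      # four data fragments plus one parity
```

Both schemes in this example survive one failure, but two-copy replication consumes twice the raw capacity while the 4+1 stripe consumes 1.25 times. The trade-off is that a rebuild must read every surviving fragment, which is one reason erasure coding tends to suit larger clusters.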

2.3 Centralized Management and Automation

A defining characteristic of HCI is its unified, centralized management platform, typically delivered via a web-based interface. This single pane of glass provides comprehensive control and visibility over all compute, storage, and networking resources within the HCI cluster. Key benefits of this approach include:

- Simplified Operations: Administrators no longer need to manage disparate systems (servers, SANs, network switches) with separate tools. The unified interface drastically reduces operational complexity, allowing IT teams to focus on higher-value strategic initiatives rather than routine infrastructure maintenance.

- Automation and Orchestration: HCI platforms are designed with automation in mind. Built-in automation features streamline mundane and time-consuming tasks such as provisioning new VMs, scaling resources, patching, upgrades, and even troubleshooting. Many platforms offer robust APIs that allow integration with broader IT automation and orchestration tools (e.g., Ansible, Terraform), enabling an ‘infrastructure-as-code’ approach.

- Predictive Analytics and AI Operations (AIOps): Advanced HCI solutions integrate analytics capabilities that monitor performance, resource utilization, and health across the cluster. These tools can leverage machine learning to provide predictive insights, identify potential bottlenecks before they impact performance, and suggest optimization strategies, moving IT from reactive problem-solving to proactive management.
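As a rough illustration of the predictive side of such tooling, the sketch below fits a straight line to daily capacity readings and estimates how many days remain before the cluster crosses a utilization threshold. Real AIOps features use far richer models; the linear trend, the 80% threshold, and the function names are assumptions made for this example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_threshold(used_tb_by_day, capacity_tb, threshold=0.8):
    """Estimate days from the last sample until usage reaches threshold * capacity.

    Returns None when usage is flat or shrinking (no projected exhaustion).
    """
    days = list(range(len(used_tb_by_day)))
    slope, intercept = fit_line(days, used_tb_by_day)
    if slope <= 0:
        return None
    day_at_limit = (capacity_tb * threshold - intercept) / slope
    return max(0.0, day_at_limit - days[-1])


# Hypothetical readings: usage grows by 0.5 TB per day on a 100 TB cluster.
print(days_until_threshold([40.0, 40.5, 41.0, 41.5, 42.0], capacity_tb=100.0))
```

A forecast like this is what allows an alert such as "add a node within N days" to be raised before performance or capacity is actually affected.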

3. Comparison of Leading HCI Vendor Solutions: A Landscape of Innovation

The Hyper-Converged Infrastructure market is dynamic and competitive, populated by several vendors offering distinct solutions tailored to various enterprise needs and existing IT ecosystems. While all HCI solutions adhere to the core architectural principles, their implementations, feature sets, and integration strategies vary significantly. This section provides a detailed comparative analysis of the leading players.

3.1 Nutanix: The Pioneer and Market Leader

Nutanix is widely recognized as the pioneer of the HCI market, offering a comprehensive and robust solution that has evolved into a full-stack enterprise cloud platform. Their core offering, the Nutanix Enterprise Cloud OS, converges compute, storage, and networking into a single platform.

- Core Technology: At the heart of Nutanix is the Acropolis Operating System (AOS), which runs on each node. AOS includes the Distributed Storage Fabric (DSF), a highly resilient and scalable software-defined storage layer. DSF aggregates local storage from all nodes into a unified pool, providing advanced data services like deduplication, compression, erasure coding, snapshots, and replication.

- Hypervisor Flexibility: A key differentiator for Nutanix is its multi-hypervisor support. While it has its own highly efficient, KVM-based Acropolis Hypervisor (AHV), it also seamlessly supports VMware vSphere and Microsoft Hyper-V. This flexibility allows organizations to leverage existing hypervisor investments or adopt AHV for simplified management and potentially lower licensing costs.

- Management: Nutanix Prism is the intuitive, centralized management console that provides a single pane of glass for monitoring, managing, and automating all aspects of the HCI cluster, from VMs to storage and network settings. Prism Central extends management capabilities across multiple clusters and geographies.

- Integrated Data Services: Nutanix boasts a rich array of integrated data services. Beyond standard features, it offers synchronous and asynchronous replication for disaster recovery (including Metro Availability for zero RPO and near-zero RTO across data centers), integrated backup, and native support for files (Nutanix Files) and objects (Nutanix Objects), further reducing the need for separate infrastructure.

- Scalability: Nutanix provides extreme flexibility in scaling, allowing organizations to add nodes incrementally to scale both compute and storage independently, or to add GPU-enabled nodes for specialized workloads. Their ‘Web-Scale’ architecture ensures linear performance growth.

- Cloud Integration: Nutanix has expanded its vision to the hybrid cloud with Nutanix Clusters on public clouds (AWS, Azure) and Xi Cloud Services offering DR-as-a-Service and other cloud-native capabilities.

3.2 VMware vSAN: Software-Defined Storage for the vSphere Ecosystem

VMware vSAN is a software-defined storage solution that is natively integrated with VMware vSphere, leveraging existing VMware investments and expertise. It transforms direct-attached storage into a shared data store for vSphere clusters.

- Core Technology: vSAN runs as a distributed layer directly within the ESXi hypervisor on each node. It pools the local disk resources of the ESXi hosts to create a single, shared datastore accessible by all VMs in the cluster. This tight integration means no separate Controller VM is required, as the storage logic is part of the hypervisor kernel.

- Policy-Based Management: A hallmark of vSAN is its Storage Policy-Based Management (SPBM). Administrators define storage policies based on application requirements (e.g., performance tiers, availability levels, RAID configurations, deduplication/compression settings). These policies are then assigned to individual VMs or even specific VMDKs, automating storage provisioning and ensuring adherence to service level agreements. This dramatically simplifies storage management compared to traditional LUN-based approaches.

- Scalability and Resilience: vSAN scales by simply adding more ESXi hosts (nodes) to the cluster, increasing both compute and storage capacity. It supports various failure tolerance methods, including replication and erasure coding, and features like Stretched Clusters for disaster recovery across geographically separated sites, providing zero RPO and near-zero RTO for critical applications.

- Ecosystem Integration: vSAN’s primary strength lies in its seamless compatibility with the entire VMware ecosystem, including vSphere, vCenter (for unified management), NSX (for network virtualization and micro-segmentation), and the vRealize Suite (for automation and operations management). This makes it a natural choice for organizations heavily invested in VMware technologies.

- Hardware Flexibility: vSAN offers flexibility in hardware choice, supporting a wide range of certified hardware configurations (vSAN ReadyNodes) from various vendors, allowing organizations to choose their preferred hardware platform.
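The policy-based approach described above can be sketched as a small data model in which each policy implies a raw-capacity multiplier. The multipliers below are the figures commonly cited for vSAN's original storage architecture (mirroring with one or two failures tolerated, RAID-5 as 3+1, RAID-6 as 4+2); treat them as assumptions to verify against current VMware documentation. The class and function names are hypothetical and are not part of any VMware API.

```python
from dataclasses import dataclass

# Assumed raw-capacity multipliers per (RAID level, failures to tolerate).
RAW_MULTIPLIER = {
    ("RAID-1", 1): 2.0,    # two full copies
    ("RAID-1", 2): 3.0,    # three full copies
    ("RAID-5", 1): 4 / 3,  # 3 data + 1 parity
    ("RAID-6", 2): 1.5,    # 4 data + 2 parity
}


@dataclass(frozen=True)
class StoragePolicy:
    """Hypothetical stand-in for a storage policy assigned to a VM or disk."""
    name: str
    raid: str
    failures_to_tolerate: int


def raw_capacity_gb(vmdk_gb, policy):
    """Raw cluster capacity consumed by a virtual disk under the given policy."""
    key = (policy.raid, policy.failures_to_tolerate)
    if key not in RAW_MULTIPLIER:
        raise ValueError(f"unsupported policy combination: {key}")
    return vmdk_gb * RAW_MULTIPLIER[key]


gold = StoragePolicy("gold", "RAID-1", 2)
bronze = StoragePolicy("bronze", "RAID-5", 1)
for policy in (gold, bronze):
    print(policy.name, round(raw_capacity_gb(100, policy), 1))
```

The point of the model is that availability and efficiency become per-VM attributes rather than properties of a LUN, so two disks on the same datastore can consume very different amounts of raw capacity.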

3.3 HPE SimpliVity: Data Efficiency and Integrated Data Protection

HPE SimpliVity offers an HCI solution distinguished by its strong emphasis on data efficiency, built-in data protection, and a unique hardware acceleration component.

- Core Technology: SimpliVity’s differentiator is its OmniStack Data Virtualization Platform, which is implemented via a combination of software and a dedicated hardware accelerator card (an FPGA-based OmniStack Accelerator Card) within each node. This card performs always-on, inline deduplication, compression, and optimization of all data at the source (globally and across clusters), before it hits disk. This results in significant storage savings and improved I/O performance.

- Integrated Backup and Disaster Recovery: SimpliVity is renowned for its comprehensive, built-in data protection capabilities. It offers integrated backup (eliminating the need for separate backup software and hardware), rapid local restore, and highly efficient, bandwidth-optimized replication for disaster recovery. VMs can be restored in seconds to minutes, and the global deduplication ensures extremely low bandwidth requirements for replication across sites.

- Global Federated Management: The solution provides a unified management experience across multiple sites and clusters through a single pane of glass (integrated with vCenter or Hyper-V manager). This makes it particularly attractive for Remote Office/Branch Office (ROBO) deployments where centralized management of distributed infrastructure is crucial.

- Scalability: SimpliVity scales by adding nodes to the cluster, allowing for independent scaling of compute and storage resources. The architecture ensures high availability through data redundancy across nodes.

- Focus on Efficiency: HPE SimpliVity often guarantees a data efficiency ratio (e.g., 10:1), which can significantly reduce storage capacity requirements and associated costs, including backup storage and network bandwidth for replication.
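A data efficiency ratio is the logical data written divided by the physical capacity actually consumed. The sketch below shows how block-level deduplication and compression produce such a ratio, assuming fixed 4 KiB blocks, SHA-256 fingerprints, and zlib compression. These are illustrative choices only and do not describe OmniStack or any other vendor's implementation; all function names are hypothetical.

```python
import hashlib
import zlib

BLOCK_SIZE = 4096  # assumed fixed block size in bytes


def store_blocks(data, block_size=BLOCK_SIZE):
    """Split data into blocks and keep one compressed copy per unique block."""
    store = {}   # fingerprint -> compressed block contents
    recipe = []  # ordered fingerprints needed to rebuild the original data
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        fingerprint = hashlib.sha256(block).hexdigest()
        if fingerprint not in store:
            store[fingerprint] = zlib.compress(block)
        recipe.append(fingerprint)
    return store, recipe


def read_blocks(store, recipe):
    """Rebuild the original data from the block store and the recipe."""
    return b"".join(zlib.decompress(store[fp]) for fp in recipe)


def efficiency_ratio(data, store):
    """Logical bytes written divided by physical bytes stored."""
    physical = sum(len(chunk) for chunk in store.values())
    return len(data) / physical


# Hypothetical workload: fifteen logical blocks, only two of them unique.
data = (b"A" * BLOCK_SIZE) * 10 + (b"B" * BLOCK_SIZE) * 5
store, recipe = store_blocks(data)
print(len(recipe), "logical blocks,", len(store), "unique blocks stored")
print(read_blocks(store, recipe) == data)
```

Synthetic data like this deduplicates far better than real workloads, so the ratio it produces says nothing about what a production environment would achieve.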

3.4 Dell EMC VxRail: Integrated Appliance for VMware Environments

Dell EMC VxRail is an HCI appliance developed in a joint engineering effort between Dell EMC and VMware. It offers a fully integrated, pre-configured, and pre-tested HCI solution specifically designed for VMware environments.

- Core Technology: VxRail appliances combine Dell EMC PowerEdge servers with VMware’s leading software components: vSphere (for compute virtualization) and vSAN (for software-defined storage). This tight integration ensures seamless compatibility and optimized performance.

- Automated Lifecycle Management: A key advantage of VxRail is its automated lifecycle management. The VxRail Manager software simplifies deployment, patching, and upgrades of the entire stack (ESXi, vSAN, firmware, drivers) through automated, non-disruptive processes. This significantly reduces administrative overhead and minimizes the risk of human error during maintenance operations.

- Seamless VMware Integration: VxRail offers the deepest possible integration with the VMware ecosystem. It is designed to work out-of-the-box with vCenter for unified management, and can easily integrate with other VMware solutions like NSX, vRealize Suite, and Cloud Foundation.

- Scalability and Configuration: VxRail supports scaling by adding nodes to the cluster, from small two-node configurations up to 64 nodes per cluster. It comes in various configurations, including all-flash and hybrid options, to meet diverse performance and capacity requirements. The product family also includes specialized node types, such as low-profile general-purpose (E Series), performance-optimized (P Series), graphics- and VDI-oriented (V Series), and storage-dense (S Series) models.

- Global Support: As a joint solution from two industry giants, VxRail benefits from unified, single-call support from Dell EMC, simplifying problem resolution for customers.

3.5 Other Notable HCI Players

While the above four vendors dominate much of the HCI market, several other companies offer compelling solutions:

- Cisco HyperFlex: Built on Cisco UCS servers and featuring the HX Data Platform (based on technology from Cisco’s Springpath acquisition), HyperFlex integrates deeply with Cisco’s networking infrastructure and is managed via Cisco Intersight, their SaaS-based cloud operations platform. It’s known for strong network integration and performance.

- Scale Computing HC3: Focuses on simplicity, resilience, and ease of use, particularly for edge computing and ROBO environments. It uses its own KVM-based hypervisor and a self-healing architecture that simplifies deployment and management for organizations with limited IT staff.

- Microsoft Azure Stack HCI: This solution brings Azure services and management to the edge or on-premises data center. It leverages Windows Server and Storage Spaces Direct (S2D) to create hyper-converged clusters, managed through Windows Admin Center and integrated with Azure Arc for hybrid cloud capabilities.

Each vendor brings a unique blend of features, integration capabilities, and market focus, allowing organizations to select an HCI solution that best aligns with their specific technical requirements, existing IT ecosystem, and business objectives.

4. Implementation Strategies for Hyper-Converged Infrastructure: A Phased Approach

Successful implementation of HCI requires meticulous planning, a thorough understanding of organizational needs, and a phased execution strategy. Unlike traditional infrastructure, HCI deployments often necessitate a re-evaluation of existing operational processes and skill sets.

4.1 Comprehensive Assessment of Organizational Needs and Infrastructure Readiness

The initial phase is critical and involves a detailed assessment to ensure the HCI solution aligns with business goals and technical requirements.

- Workload Analysis and Profiling: This is paramount. Organizations must thoroughly analyze their existing and future workload demands. This includes:

  - I/O Characteristics: Understanding IOPS, latency, and throughput requirements for critical applications (e.g., databases, VDI, analytics platforms). Identify ‘noisy neighbors’ and highly sensitive workloads.

  - Compute Requirements: CPU core count, clock speed, and RAM per VM.

  - Storage Capacity: Current and projected data growth, including requirements for deduplication, compression, and long-term retention.

  - Network Dependencies: Inter-application communication, bandwidth between sites, and latency tolerance.

- Existing Infrastructure Audit: Evaluate the current state of servers, storage arrays, network switches, and virtualization platforms. Identify components that can be integrated with the new HCI environment (e.g., existing network fabric, backup solutions) and those that are candidates for replacement. Assess software licenses that may be reused or retired.

- Total Cost of Ownership (TCO) Analysis: Conduct a detailed TCO comparison between HCI and traditional infrastructure. This should encompass:

  - Capital Expenditure (CapEx): Initial hardware acquisition (servers, storage, networking components), software licenses.

  - Operational Expenditure (OpEx): Power, cooling, data center rack space, maintenance contracts, administrative labor costs (including reduced troubleshooting time), and potential savings from simplified backup/DR.

- Skill Set Assessment and Training Needs: Evaluate the IT team’s existing expertise in virtualization, storage, and networking. HCI simplifies many aspects, but new skills may be required for optimal management and troubleshooting of the converged stack. Plan for necessary training programs.

- Network Readiness Assessment: HCI relies heavily on robust network connectivity between nodes. Ensure the existing network infrastructure (switches, cabling) supports the required bandwidth (e.g., 10GbE or 25GbE+ per node), low latency, and proper VLAN segmentation. QoS settings might be necessary for specific workloads to ensure performance.
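The workload profile gathered in this phase feeds directly into cluster sizing. The sketch below estimates a node count from aggregate vCPU, RAM, and storage demand, sizing for the most constrained resource and then adding spare nodes for failure tolerance. The 4:1 vCPU-to-core ratio, the 20% headroom, the single spare node, and all names are assumptions for illustration; vendor sizing tools should be used for real designs.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    """Aggregate demand across all VMs to be hosted (hypothetical profile)."""
    vcpus: int
    ram_gb: float
    storage_tb: float


@dataclass
class NodeSpec:
    """Resources contributed by one HCI node (hypothetical specification)."""
    cores: int
    ram_gb: float
    usable_tb: float  # capacity after data protection overhead


def nodes_required(workload, node, vcpu_per_core=4.0, headroom=0.2, spare_nodes=1):
    """Size for the most constrained resource, then add spare nodes."""
    factor = 1 + headroom
    by_cpu = math.ceil(workload.vcpus * factor / (node.cores * vcpu_per_core))
    by_ram = math.ceil(workload.ram_gb * factor / node.ram_gb)
    by_storage = math.ceil(workload.storage_tb * factor / node.usable_tb)
    return max(by_cpu, by_ram, by_storage) + spare_nodes


demand = Workload(vcpus=400, ram_gb=2000, storage_tb=60)
node = NodeSpec(cores=32, ram_gb=512, usable_tb=20)
print(nodes_required(demand, node))  # RAM is the limiting resource here
```

In this example memory, not storage, determines the node count, which is a common outcome and a reminder to profile all three resources rather than capacity alone.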

4.2 Pilot Deployment: Validation and Optimization

Before committing to a full-scale deployment, a pilot project or proof-of-concept (PoC) is highly recommended. This allows for validation in a controlled environment.

- Define Scope and Success Metrics: Clearly outline the objectives of the pilot. This might involve migrating a small, non-critical workload or deploying a specific new application. Define measurable success metrics, such as performance benchmarks, ease of management, resource utilization, and successful integration with existing systems.

- Test Compatibility and Integration: Verify that the chosen HCI solution integrates seamlessly with existing applications, operating systems, management tools (e.g., monitoring, backup), and security policies. Test specific functionalities like snapshot recovery, data replication, and high availability failovers.

- Evaluate Performance Under Real-World Conditions: Subject the pilot deployment to realistic workload simulations. Monitor key performance indicators (KPIs) like IOPS, latency, CPU utilization, and memory consumption to ensure the HCI system meets or exceeds performance expectations.

- Gather Feedback: Solicit feedback from IT administrators, application owners, and end-users involved in the pilot. This qualitative feedback is invaluable for identifying unforeseen challenges and refining the deployment strategy.

- Refine Configuration: Based on pilot results, make necessary adjustments to the HCI configuration, resource allocation, and networking settings to optimize for production workloads.
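Success metrics are easiest to enforce when they are written down as explicit thresholds and checked against measured samples. The sketch below evaluates pilot measurements against a 95th-percentile latency limit and a minimum average IOPS figure, using a nearest-rank percentile. The metric choices, thresholds, and function names are hypothetical.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate_pilot(latencies_ms, iops_samples, max_p95_latency_ms, min_avg_iops):
    """Compare measured KPIs with the pilot's agreed success thresholds."""
    p95 = percentile(latencies_ms, 95)
    avg_iops = sum(iops_samples) / len(iops_samples)
    return {
        "p95_latency_ms": p95,
        "avg_iops": avg_iops,
        "latency_ok": p95 <= max_p95_latency_ms,
        "iops_ok": avg_iops >= min_avg_iops,
    }


# Hypothetical measurements collected during a pilot run.
report = evaluate_pilot(
    latencies_ms=[1.2, 0.9, 1.5, 4.8, 1.1, 1.0, 1.3, 0.8, 1.4, 1.2],
    iops_samples=[9000, 11000, 10000],
    max_p95_latency_ms=5.0,
    min_avg_iops=9500,
)
print(report)
```

Percentiles are used instead of averages for latency because a small number of slow operations can dominate the user experience while barely moving the mean.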

4.3 Full-Scale Deployment: Phased Migration and Operational Transition

Once the pilot is successful and validated, organizations can proceed with the full-scale deployment, ideally through a phased rollout to manage risk and ensure a smooth transition.

- Phased Rollout Strategy: Instead of a ‘big bang’ approach, adopt a phased migration plan. This could involve:

  - Workload Prioritization: Migrating less critical applications first, followed by moderately critical ones, and finally the most business-critical applications.

  - Departmental Rollout: Deploying HCI for one department or business unit at a time.

  - Greenfield vs. Brownfield: Deciding whether to deploy HCI for new applications (greenfield) or migrate existing workloads (brownfield). Brownfield migrations require careful planning for data transfer and application cutovers.

- Data Migration Strategies: Plan how data will be moved from legacy storage to the HCI platform. Options include ‘lift-and-shift’ (P2V or V2V conversion tools), application-level migration (e.g., database backups/restores), or using native HCI migration tools if available.

- Network Configuration and Security: Implement the designed network topology, configure VLANs, ensure proper firewall rules, and establish micro-segmentation policies where applicable to enhance security within the HCI environment.

- Integration with IT Operations Management (ITOM) Tools: Integrate the HCI management platform with existing monitoring, alerting, backup, and disaster recovery solutions to maintain a holistic view of the IT environment.

- Staff Training and Skill Development: Provide comprehensive training for IT staff responsible for managing the HCI environment. This includes not just technical skills but also new operational workflows and troubleshooting methodologies.

- Establish Support Mechanisms: Define clear escalation paths, vendor support contacts, and internal knowledge bases to address any issues post-deployment swiftly and efficiently.

- Continuous Optimization: Post-deployment, regularly monitor performance, resource utilization, and security posture. Leverage the HCI platform’s analytics capabilities to identify areas for optimization and ensure the infrastructure continues to meet evolving business needs.
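The workload-prioritization approach to a phased rollout can be expressed as a simple ordering problem: sort applications from least to most critical and cut the list into migration waves. The sketch below assumes a three-level criticality score and a fixed wave size; both, along with the application names, are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class App:
    name: str
    criticality: int  # assumed scale: 1 = low, 2 = medium, 3 = business-critical


def plan_waves(apps, wave_size):
    """Order apps from least to most critical and split them into waves."""
    ordered = sorted(apps, key=lambda app: (app.criticality, app.name))
    return [ordered[i:i + wave_size] for i in range(0, len(ordered), wave_size)]


apps = [App("erp", 3), App("wiki", 1), App("crm", 2), App("test-lab", 1)]
for number, wave in enumerate(plan_waves(apps, wave_size=2), start=1):
    print(f"wave {number}:", [app.name for app in wave])
```

In practice the ordering would also account for application dependencies and maintenance windows, which this sketch deliberately leaves out.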

By following these structured implementation strategies, organizations can maximize the value derived from their HCI investment, ensuring a seamless transition and sustained operational excellence.

5. Benefits of Hyper-Converged Infrastructure Across Diverse Workloads

Hyper-Converged Infrastructure delivers a compelling array of benefits that collectively transform data center operations, making it an ideal platform for a broad spectrum of enterprise workloads. These advantages extend beyond mere technical specifications to significantly impact an organization’s financial health, operational agility, and competitive posture.

5.1 Substantial Cost Savings

HCI’s design inherently drives cost efficiencies, both in capital expenditure (CapEx) and operational expenditure (OpEx).

- Reduced Capital Expenditure (CapEx):

  - Hardware Consolidation: By integrating compute, storage, and networking into a single commodity x86 server footprint, HCI significantly reduces the need for discrete, expensive storage arrays (SAN/NAS), Fibre Channel switches, and often dedicated backup hardware. This leads to lower upfront hardware costs.

  - Simplified Procurement: Purchasing infrastructure becomes simpler, often involving fewer vendors and consolidated purchase orders, streamlining the procurement process and potentially unlocking volume discounts.

  - Modular Scaling: The ‘pay-as-you-grow’ node-based expansion model means organizations only invest in the resources they need, when they need them, avoiding over-provisioning and large, infrequent capital outlays associated with traditional forklift upgrades.

- Operational Efficiency (OpEx) Leading to Savings:

  - Reduced Rack Space, Power, and Cooling: Consolidating hardware onto fewer, denser nodes significantly decreases the physical footprint in the data center, leading to substantial savings on real estate, electricity consumption, and cooling infrastructure.

  - Lower Administrative Overhead: The unified management platform and extensive automation capabilities drastically reduce the time and effort IT staff spend on routine tasks like provisioning, patching, troubleshooting, and maintenance. This allows IT teams to be more productive and focus on strategic initiatives rather than reactive fire-fighting.

  - Simplified Maintenance and Support: With fewer components and often a single vendor for support (or a unified support model for joint solutions), maintenance contracts and troubleshooting efforts are streamlined, reducing associated costs and downtime.
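The CapEx and OpEx categories above combine into a total cost of ownership (TCO) figure: upfront spend plus recurring costs over the evaluation period. The sketch below shows the arithmetic only. Every number in it is an invented placeholder rather than a measured or published result, and the `CostModel` fields are a hypothetical simplification of the categories listed in this section.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    """Simplified cost inputs; all values used below are invented placeholders."""
    capex: float               # hardware and software licenses, paid up front
    power_cooling: float       # per year
    rack_space: float          # per year
    maintenance: float         # per year
    admin_labor: float         # per year

    def annual_opex(self):
        return self.power_cooling + self.rack_space + self.maintenance + self.admin_labor

    def tco(self, years):
        """Upfront CapEx plus OpEx accumulated over the period (no discounting)."""
        return self.capex + self.annual_opex() * years


def tco_savings(traditional, hci, years):
    """Positive result means the HCI option is cheaper over the period."""
    return traditional.tco(years) - hci.tco(years)


traditional = CostModel(500_000, 40_000, 20_000, 60_000, 150_000)
hci = CostModel(400_000, 25_000, 10_000, 40_000, 90_000)
print(traditional.tco(5), hci.tco(5), tco_savings(traditional, hci, 5))
```

A fuller model would discount future cash flows and include migration, training, and hardware refresh costs, any of which can change the outcome for a particular organization.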

5.2 Enhanced Operational Efficiency

Beyond direct cost savings, HCI fundamentally improves the efficiency of IT operations.

- Simplified Management (Single Pane of Glass): The most palpable operational benefit is the unified management interface. Administrators no longer toggle between disparate tools for servers, storage, and networking. This single pane of glass simplifies monitoring, configuration, and troubleshooting, reducing complexity and the potential for human error.

- Accelerated Provisioning: With automation and software-defined capabilities, provisioning new VMs or expanding resources that once took days or weeks in traditional environments can now be accomplished in minutes. This agility directly supports business responsiveness and faster time-to-market for new applications and services.

- Improved Resource Utilization: HCI’s ability to dynamically allocate and reallocate compute, storage, and network resources ensures that workloads receive precisely what they need, when they need it. Intelligent algorithms can balance resources across the cluster, preventing resource contention and optimizing overall system performance, leading to higher utilization rates of expensive hardware.

- Self-Healing and Proactive Management: Many HCI solutions incorporate self-healing capabilities, automatically rebalancing data and workloads in the event of a node or disk failure. Advanced analytics and AIOps features can predict potential issues, provide recommendations for optimization, and automate routine tasks, moving IT operations from reactive to proactive.

- Streamlined Updates and Upgrades: HCI platforms typically offer non-disruptive, one-click or automated upgrades for the entire software stack (hypervisor, storage fabric, firmware), significantly reducing maintenance windows and minimizing service disruption.
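As a rough illustration of the self-healing behaviour described above, the following Python sketch models a cluster that keeps two copies of each data block on different nodes and, after a single node failure, re-creates the lost copies on the least-loaded surviving nodes. The replication factor, node names, and placement policy are assumptions made for this example; commercial HCI platforms use their own, more sophisticated placement and rebuild logic.

```python
from collections import defaultdict

REPLICATION_FACTOR = 2  # assumption: two copies of every block


def place_blocks(block_ids, nodes, rf=REPLICATION_FACTOR):
    """Place rf copies of each block on distinct nodes, round-robin."""
    placement = {}
    for i, block in enumerate(block_ids):
        placement[block] = {nodes[(i + k) % len(nodes)] for k in range(rf)}
    return placement


def heal_after_failure(placement, failed_node, surviving_nodes, rf=REPLICATION_FACTOR):
    """Re-create the copies lost on failed_node on the least-loaded survivors."""
    # Count how many replicas each surviving node already holds.
    load = defaultdict(int)
    for replicas in placement.values():
        for node in replicas:
            if node != failed_node:
                load[node] += 1

    healed = {}
    for block, replicas in placement.items():
        alive = set(replicas) - {failed_node}
        while len(alive) < rf:
            candidates = [n for n in surviving_nodes if n not in alive]
            if not candidates:
                raise RuntimeError(f"not enough nodes to re-protect {block}")
            # Pick the survivor holding the fewest replicas (name breaks ties).
            target = min(candidates, key=lambda n: (load[n], n))
            alive.add(target)
            load[target] += 1
        healed[block] = alive
    return healed


nodes = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical node names
placement = place_blocks([f"blk-{i}" for i in range(8)], nodes)
survivors = [n for n in nodes if n != "node-b"]
healed = heal_after_failure(placement, "node-b", survivors)

for block in sorted(healed):
    print(block, sorted(healed[block]))
```

Blocks that never lived on the failed node keep their original placement; only the affected blocks are re-replicated, which is why such rebuilds can proceed in the background while workloads keep running.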

5.3 Agility and Performance Across Various Workloads

HCI’s inherent flexibility, scalability, and performance characteristics make it exceptionally well-suited for a diverse range of enterprise workloads.

Virtual Desktop Infrastructure (VDI): VDI is often considered the ‘killer app’ for HCI. The platform’s advantages for this workload include:

- Boot Storm Resilience: The distributed storage and flash optimization of HCI nodes effectively mitigate ‘boot storms’ (when many desktops power on simultaneously) and ‘login storms’, ensuring a consistent and responsive user experience.

- Simplified Scaling: As the number of VDI users fluctuates, HCI allows for incremental scaling of resources, easily adding nodes to accommodate more desktops.

- Storage Efficiency: Native deduplication and compression are highly effective for VDI environments, where many virtual desktops share common operating system and application files, drastically reducing storage footprint.

- Simplified Management: Centralized management of VDI desktops and underlying infrastructure reduces administrative burden.
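To make the storage-efficiency point above more concrete, here is a minimal Python sketch of fixed-size block deduplication across many desktop images. It assumes a 4 KiB block size, SHA-256 fingerprints, and entirely synthetic data (50 desktops sharing one 100-block base image plus 5 unique blocks each); none of these figures come from a specific vendor, and real deduplication engines differ in chunking, hashing, and metadata handling.

```python
import hashlib

BLOCK_SIZE = 4096  # assumption: fixed 4 KiB blocks


def dedup_stats(disk_images, block_size=BLOCK_SIZE):
    """Return (logical_blocks, unique_blocks) across all images."""
    seen = set()
    logical = 0
    for image in disk_images:
        for offset in range(0, len(image), block_size):
            chunk = image[offset:offset + block_size]
            # Identical blocks produce identical fingerprints and are stored once.
            seen.add(hashlib.sha256(chunk).digest())
            logical += 1
    return logical, len(seen)


# Synthetic base image: 100 distinct blocks shared by every desktop.
os_image = b"".join(bytes([i]) * BLOCK_SIZE for i in range(100))

# Each of the 50 hypothetical desktops adds 5 blocks of unique user data.
desktops = [
    os_image
    + b"".join(f"user{d}-{j}".encode().ljust(BLOCK_SIZE, b"\0") for j in range(5))
    for d in range(50)
]

logical, unique = dedup_stats(desktops)
print(f"logical blocks: {logical}, unique blocks: {unique}, "
      f"ratio: {logical / unique:.1f}:1")
```

With this synthetic data set, 5,250 logical blocks reduce to 350 unique blocks. The ratio is an artifact of the chosen inputs and should not be read as a typical production figure.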

Database Management (OLTP/OLAP): Modern HCI platforms are increasingly capable of supporting demanding database workloads.

- High Performance I/O: All-flash or hybrid HCI configurations, combined with data locality and optimized I/O paths, deliver the low latency and high IOPS required by transactional (OLTP) databases.

- High Availability and Data Protection: Built-in replication, snapshots, and automatic failover ensure databases remain continuously available and protected against hardware failures, minimizing recovery point objectives (RPO) and recovery time objectives (RTO).

- Scalability: As databases grow, HCI allows for seamless scaling of compute and storage resources without disrupting operations.

Remote Office/Branch Office (ROBO) Deployments: HCI is an ideal fit for ROBO scenarios due to its simplicity and integrated nature.

- Simplified Deployment and Management: ROBO sites often have limited or no dedicated IT staff. HCI’s ‘rack-it-and-go’ deployment, ease of management from a central location, and minimal local configuration requirements are highly advantageous.

- Cost-Effectiveness: Reduced hardware footprint, lower power consumption, and integrated data protection mean lower overall costs for remote sites.

- Integrated Data Protection: Native backup and disaster recovery capabilities ensure data at remote sites is protected and can be easily replicated back to a central data center or cloud.

Cloud Integration and Hybrid Cloud Strategies: HCI serves as a strong foundation for hybrid cloud models.

- Consistent Platform: It provides a consistent operational environment between on-premises and public cloud (e.g., Nutanix Clusters on AWS/Azure, Azure Stack HCI).

- Disaster Recovery to Cloud: Many HCI solutions offer integrated replication to public cloud storage or DR-as-a-Service, providing cost-effective and agile disaster recovery options.

DevOps and Test/Development Environments: The speed and flexibility of HCI are invaluable for development teams.

- Rapid Provisioning: Developers can rapidly provision new environments, test beds, and application instances, accelerating software development lifecycles.

- Efficient Cloning: Space-efficient snapshots and clones allow for instant creation of multiple development and testing environments without consuming excessive storage.
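The space-efficient cloning mentioned above can be sketched with a toy redirect-on-write model: a clone copies only the block map (metadata), and new physical blocks are allocated only when a volume is written to. The `BlockStore` and `Volume` classes below are hypothetical names invented for this illustration and do not correspond to any vendor's API.

```python
import itertools


class BlockStore:
    """Toy physical store: every write allocates a new immutable block."""

    def __init__(self):
        self._next_id = itertools.count()
        self.data = {}

    def allocate(self, payload):
        block_id = next(self._next_id)
        self.data[block_id] = payload
        return block_id


class Volume:
    """Toy volume: maps logical block numbers to physical block ids."""

    def __init__(self, store, block_map=None):
        self.store = store
        self.block_map = dict(block_map or {})

    def write(self, logical_block, payload):
        # Redirect-on-write: point this volume at a freshly allocated block,
        # leaving blocks shared with other volumes untouched.
        self.block_map[logical_block] = self.store.allocate(payload)

    def read(self, logical_block):
        return self.store.data[self.block_map[logical_block]]

    def clone(self):
        # Only the block map is copied; no block data is duplicated.
        return Volume(self.store, self.block_map)


store = BlockStore()
golden = Volume(store)
for i in range(1000):
    golden.write(i, f"base-{i}".encode())

clones = [golden.clone() for _ in range(10)]
clones[0].write(42, b"patched")  # diverges only this one block

logical_blocks = sum(len(v.block_map) for v in [golden, *clones])
print("logical blocks:", logical_blocks)
print("physical blocks:", len(store.data))
```

Eleven volumes expose 11,000 logical blocks while the store holds 1,001 physical blocks, which is the effect that lets test and development teams create many environments from one golden image. The sketch omits garbage collection of unreferenced blocks.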

Edge Computing: With the proliferation of IoT devices and the need for real-time data processing at the source, HCI is emerging as a preferred solution for edge deployments.

- Small Footprint & Resilience: Single or two-node HCI clusters offer enterprise-grade capabilities in a compact, rugged form factor, ideal for locations with limited space and environmental controls.

- Remote Manageability: Centralized management capabilities allow edge HCI deployments to be managed remotely from a core data center, minimizing on-site IT requirements.

In essence, HCI provides a robust, adaptable, and economically viable infrastructure capable of meeting the diverse and evolving demands of modern enterprise IT, from traditional data center workloads to emerging edge and hybrid cloud use cases.

6. Emerging Trends and Future Outlook of HCI

The HCI market continues to evolve rapidly, driven by technological advancements and shifting enterprise demands. Several key trends are shaping its future:

HCI and Kubernetes/Containers: As organizations increasingly adopt containerization (e.g., Docker, Kubernetes) for microservices architectures, HCI is becoming a favored platform. HCI’s distributed storage and simplified management provide an excellent foundation for container orchestration platforms, offering persistent storage for stateful applications and reducing infrastructure complexity for DevOps teams. Vendors are enhancing their HCI platforms with native Kubernetes integration, enabling ‘Kubernetes-as-a-Service’ or ‘Containers-as-a-Service’ capabilities.

AI/ML Workloads on HCI: The growing demand for Artificial Intelligence and Machine Learning workloads, which are often GPU-intensive and require high-performance storage, is influencing HCI development. Vendors are now offering HCI nodes with integrated GPUs, optimized for data science and AI applications, demonstrating HCI’s ability to handle even the most demanding computational tasks.

HCI at the Edge: The proliferation of IoT devices and the need for real-time data processing at the source are driving the expansion of HCI into edge computing environments. Single-node or compact two-node HCI clusters provide enterprise-grade reliability, security, and remote manageability for factories, retail stores, remote oil rigs, and other distributed locations where traditional IT infrastructure is impractical.

HCI as the Foundation for Hybrid and Multi-Cloud: HCI is increasingly viewed as the optimal on-premises anchor for hybrid and multi-cloud strategies. By offering a consistent operational model and API set between on-premises and public cloud environments, HCI platforms facilitate seamless workload mobility, disaster recovery, and data synchronization across diverse cloud landscapes, bridging the gap between traditional data centers and hyperscale clouds.

Consumption Models (HCI-as-a-Service): The shift towards ‘as-a-Service’ consumption models is impacting HCI. Vendors are offering HCI infrastructure through flexible subscription-based models (e.g., HPE GreenLake, Dell APEX, Nutanix Consumption-based IT). This allows organizations to consume HCI resources on demand, paying for what they use, further aligning IT costs with business value and offering cloud-like agility on-premises.

Enhanced Security Features: With increasing cyber threats, HCI platforms are integrating advanced security capabilities such as micro-segmentation, immutable infrastructure, ransomware protection, and deeper integration with security information and event management (SIEM) systems, providing a more resilient and secure foundation for enterprise applications.

7. Challenges and Considerations for HCI Adoption

While Hyper-Converged Infrastructure offers numerous advantages, organizations should also be aware of potential challenges and considerations during adoption and operation:

Initial Sizing Complexity: Properly sizing an HCI cluster requires a thorough understanding of workload requirements, including CPU, RAM, and most critically, storage I/O profiles. Under-sizing can lead to performance bottlenecks, while over-sizing can negate cost benefits. While vendors offer sizing tools, accurate data collection from existing environments is crucial.

Network Design Importance: Although HCI simplifies many aspects of networking, a robust and well-designed physical network (switches, cabling) is paramount. High-bandwidth, low-latency interconnects between HCI nodes are essential for optimal performance of the distributed storage fabric. Poor network design can become a significant bottleneck.

Vendor Lock-in (Perceived vs. Actual): Some critics argue that HCI can lead to vendor lock-in due to the integrated nature of the solution. However, leading vendors mitigate this through multi-hypervisor support (Nutanix), hardware flexibility (vSAN), and open APIs. The ‘lock-in’ is often at the software layer rather than the hardware, which is a different dynamic than traditional integrated stacks.

Compute-Storage Ratio: In a fully hyper-converged model, compute and storage scale together. If an organization has a disproportionate need for only compute or only storage, it might lead to over-provisioning of the other resource. Modern HCI solutions, however, offer more flexible scaling options (e.g., compute-only nodes, storage-heavy nodes) to address this.

Operational Mindset Shift: Adopting HCI requires a shift in IT operational mindset. Teams accustomed to managing separate compute, storage, and network silos will need to embrace a more holistic, software-defined approach. This requires training and potentially reorganization of IT teams to leverage the benefits of a converged infrastructure fully.

Cost of Entry for Small Scale: For very small deployments (e.g., one or two VMs), the initial investment in a multi-node HCI cluster might appear higher than a single traditional server. However, this is often offset by reduced operational complexity, better scalability for future growth, and inherent high availability.

Addressing these considerations through careful planning, thorough assessment, and appropriate training can help organizations successfully navigate their HCI journey and unlock its full potential.
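The sizing and compute-storage ratio considerations above can be sketched as a back-of-the-envelope calculation. The Python example below estimates how many nodes each resource would require and reports which one is the binding constraint. Every number in it (node specification, workload totals, a 4:1 vCPU-to-core ratio, a replication factor of 2, one spare node, a three-node minimum) is a hypothetical assumption; it ignores hypervisor and storage-controller overhead as well as deduplication savings, and it is no substitute for a vendor sizing tool fed with measured data.

```python
import math
from dataclasses import dataclass


@dataclass
class NodeSpec:
    cores: int      # physical CPU cores per node
    ram_gb: int     # usable RAM per node
    raw_tb: float   # raw storage capacity per node


@dataclass
class Workload:
    vcpus: int        # total vCPUs across all VMs
    ram_gb: int       # total RAM across all VMs
    usable_tb: float  # usable capacity required before replication


def size_cluster(workload, node, vcpu_per_core=4.0, replication_factor=2,
                 spare_nodes=1, min_nodes=3):
    """Return (node_count, nodes needed per resource) under the stated assumptions."""
    needed = {
        "cpu": math.ceil(workload.vcpus / (node.cores * vcpu_per_core)),
        "ram": math.ceil(workload.ram_gb / node.ram_gb),
        # Each usable TB consumes replication_factor TB of raw capacity.
        "storage": math.ceil(workload.usable_tb * replication_factor / node.raw_tb),
    }
    # The most demanding resource sets the node count; add spare capacity
    # so the cluster can tolerate a node failure.
    nodes = max(max(needed.values()) + spare_nodes, min_nodes)
    return nodes, needed


node = NodeSpec(cores=32, ram_gb=512, raw_tb=20.0)          # hypothetical node
workload = Workload(vcpus=600, ram_gb=1800, usable_tb=90.0)  # hypothetical demand

nodes, needed = size_cluster(workload, node)
binding = max(needed, key=needed.get)
print(f"nodes required: {nodes} (binding resource: {binding})")
print("nodes needed per resource:", needed)
```

In this example storage drives the cluster to ten nodes even though CPU alone would need five, which illustrates how an imbalanced workload can over-provision compute in a strictly hyper-converged design and why storage-heavy or compute-only node options matter.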

8. Conclusion

Hyper-Converged Infrastructure stands as a significant advancement in data center architecture, moving beyond the inherent complexities and inefficiencies of traditional siloed systems. By seamlessly integrating compute, storage, and networking into a unified, software-defined platform, HCI offers a compelling solution for modern IT environments grappling with increasing data volumes, dynamic workload demands, and the imperative for greater operational agility.

The detailed examination of HCI’s architectural principles—including the pivotal roles of software-defined storage, distributed data planes, and advanced virtualization—reveals a robust, scalable, and resilient foundation. The comparative analysis of leading vendor solutions from Nutanix, VMware, HPE, and Dell EMC underscores the market’s innovation, offering diverse approaches to meet varied enterprise requirements while consistently delivering on the promise of simplification and performance.

Effective implementation strategies, from rigorous needs assessment and pilot deployments to phased migration and continuous optimization, are crucial for realizing HCI’s full potential. When deployed thoughtfully, HCI confers substantial benefits: driving significant cost savings through hardware consolidation and operational efficiencies, enhancing IT agility through accelerated provisioning and simplified management, and providing a high-performance, resilient platform for a broad array of workloads, including VDI, demanding databases, and distributed ROBO and edge computing sites.

As organizations navigate the complexities of digital transformation and embrace hybrid and multi-cloud strategies, HCI is increasingly positioned not merely as an alternative to traditional infrastructure but as a foundation for it. Its ongoing evolution, marked by deeper integration with containers, support for AI/ML, and flexible consumption models, supports its continued relevance. For IT leaders seeking to modernize their data center operations, reduce operational overhead, and give their businesses flexible, high-performing infrastructure, a thorough understanding and deliberate adoption of Hyper-Converged Infrastructure is no longer optional but a strategic imperative.

The point about vendor lock-in is interesting, particularly the shift from hardware to software. As HCI adoption grows, how do you see the industry addressing concerns around data portability and interoperability between different HCI platforms?

That’s a great question! The industry seems to be moving towards more open APIs and standardized data formats to improve data portability. There’s also growing interest in solutions that provide a vendor-neutral layer for managing data across multiple HCI platforms, which could really help address interoperability concerns as adoption increases. What are your thoughts on the role of open source in this space?

Editor: StorageTech.News

The discussion on consumption models is particularly relevant. How do you see the balance shifting between on-premise HCI and cloud-based HCIaaS offerings, especially concerning data sovereignty and compliance requirements for specific industries?

Thanks for highlighting the consumption models! Data sovereignty is indeed key. I believe we’ll see a rise in hybrid HCIaaS solutions, offering the flexibility of cloud with on-prem control. Industries with strict compliance will likely favor on-prem or hybrid models that provide the necessary data governance.

Editor: StorageTech.News

The discussion around consumption models is timely. The report mentions HCI as a service. How do you see the pricing and metering evolving to accommodate diverse workload requirements and ensure cost predictability for organizations adopting these models?

That’s a great question! I envision pricing becoming more granular, moving beyond simple vCPU/GB metrics to incorporate application-specific performance tiers. Metering will need to become more sophisticated, potentially leveraging AI to dynamically adjust resource allocation and optimize costs based on real-time workload demands, ensuring both efficiency and predictable budgeting.

Editor: StorageTech.News

The discussion around workload analysis and profiling is insightful. Could we elaborate on the role of AI-driven predictive analytics in optimizing resource allocation within HCI environments, especially considering the diverse I/O characteristics of modern applications?