The Ultimate Guide to Data Backup: Building an Unshakeable Digital Fortress

In today’s dizzying digital age, data isn’t just important; it’s the very bedrock of modern existence. From the sprawling enterprises managing petabytes of customer information to the solopreneur meticulously crafting their digital portfolio, our reliance on data has never been more profound. Just imagine a sudden, catastrophic loss: years of financial records vanishing into the digital ether, or those precious family photos, irreplaceable memories, simply gone. The implications, both personal and professional, are truly terrifying.

Data loss isn’t just an inconvenience; it can be a business-ending event, a reputational nightmare, and a deeply emotional blow. So, it’s not just about ‘having backups,’ you see, it’s about implementing a robust, thoughtful strategy that acts as your ultimate safety net. A well-designed data backup plan is your shield against the unforeseen, your recovery path when disaster strikes, ensuring your information remains secure and, crucially, recoverable. Let’s delve deep into the best practices that’ll help you forge this digital fortress.

1. The Unshakeable Foundation: Embracing the 3-2-1 Backup Rule

This rule, which you may well have heard of, is a gold standard in data protection, and honestly, if you’re not following it, you’re playing a pretty risky game. It’s elegantly simple, yet incredibly powerful, forming the bedrock of any resilient backup strategy. Let’s really dig into what those numbers mean and why each piece is absolutely crucial.

Three Copies of Your Data: Redundancy is Your Friend

It seems almost excessive to some, doesn’t it? But think about it this way: one copy is your live, working data, the files you’re interacting with every single day. The other two are your safety nets. If one backup fails – and trust me, backups do fail sometimes, whether it’s a corrupted drive or a software glitch – you’ve got another one ready to go. It’s like having a spare tire, then another spare tire for your spare.

When I first started out in IT, I witnessed a small agency lose a whole quarter’s worth of client data because their single external hard drive backup just… died. Poof! No warning. That experience really hammered home why redundancy isn’t a luxury, it’s a necessity. You need your original data plus two distinct backups, giving you that critical cushion against unforeseen problems.

Two Different Media Types: Diversify Your Storage

This part is often overlooked, but it’s super important. Storing all your backups on the same type of media, say, all on external hard drives, leaves you vulnerable to a common failure point. Imagine a power surge fries your primary server, and because all your external drives were plugged into the same power strip, they’re gone too. Disaster! By diversifying your media, you’re protecting against this.

What does that mean in practice? Well, maybe you keep one backup on an external hard drive (a physical, tangible piece of hardware), and another safely nestled in the cloud (a remote, internet-based solution). Other options include network-attached storage (NAS) devices, tape drives for larger, archival needs, or even solid-state drives (SSDs) for faster, more durable local copies. The beauty is in the mix; each media type has its own failure characteristics, so combining them drastically reduces the chance of simultaneous catastrophic loss.

One Offsite Copy: Your Disaster Recovery Ace

This, my friends, is the game-changer, the ultimate insurance policy against the truly unexpected. A fire, a flood, or a robbery could destroy all your local backups at once, and even a localized power outage could leave them out of reach just when you need them, couldn’t it? That’s where the offsite copy swoops in like a superhero. It ensures that even if your entire primary location is wiped out, your critical data remains safe and sound, accessible from somewhere else entirely.

For businesses, this might mean replicating data to a geographically distant data center or utilizing robust cloud backup services. For individuals, it could be as simple as keeping copies in Google Drive or Dropbox, or storing an external drive at a friend’s house or in a bank’s safety deposit box. One caveat: pure file syncing mirrors deletions and corruption as faithfully as it mirrors good changes, so lean on a service that keeps version history. The key is physical separation. If something catastrophic happens at your main site, your data isn’t going down with the ship. It’s a simple concept, really, but one that so many people neglect until it’s too late.
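
To make the rule concrete, here is a minimal sketch of a 3-2-1 check in Python. The `Copy` record, the media labels, and the `check_3_2_1` function are all hypothetical names invented for illustration; a real inventory would come from your backup tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    name: str      # label for this copy (illustrative)
    media: str     # e.g. "internal-disk", "external-hdd", "cloud"
    offsite: bool  # True if stored away from the primary location


def check_3_2_1(copies):
    """Return a list of rule violations; an empty list means the rule is met."""
    problems = []
    if len(copies) < 3:
        problems.append(f"need 3 copies, found {len(copies)}")
    if len({c.media for c in copies}) < 2:
        problems.append("need at least 2 different media types")
    if not any(c.offsite for c in copies):
        problems.append("need at least 1 offsite copy")
    return problems


# The live data counts as one of the three copies.
inventory = [
    Copy("live data", "internal-disk", offsite=False),
    Copy("nightly backup", "external-hdd", offsite=False),
    Copy("cloud backup", "cloud", offsite=True),
]
print(check_3_2_1(inventory))  # prints []
```

Drop the cloud copy from `inventory` and the check reports both the missing third copy and the missing offsite copy, which is exactly the gap the rule is designed to expose.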

2. Automate, Don’t Hesitate: The Power of Set-and-Forget Backups

Let’s be brutally honest for a moment: manual backups are a chore. We’ve all been there, haven’t we? That little voice in your head says ‘you really should back up those files,’ and you promptly ignore it until, a week later, something goes wrong. Human nature dictates that if something is repetitive and requires conscious effort, it’s prone to being forgotten, postponed, or done incorrectly. That’s why automation isn’t just a convenience; it’s a critical component of a reliable data protection strategy.

Think of automation as your ever-vigilant digital assistant, tirelessly working in the background, ensuring your data is secured without you lifting a finger. By setting up scheduled backups, you’re essentially programming a system to perform these vital tasks consistently, whether it’s daily, hourly, or even in real-time for highly critical data. This dramatically reduces the risk of human error, the leading culprit in many data loss scenarios. You eliminate the ‘did I remember to back up yesterday?’ anxiety, replacing it with the peace of mind that comes from knowing your systems are diligently protecting your information around the clock.

Modern backup software, whether for individual machines or complex corporate servers, offers incredible flexibility in scheduling. You can configure backups to run during off-peak hours, minimizing any potential impact on system performance. Furthermore, many solutions provide detailed logs and notifications, so while the process is automated, you’re never completely out of the loop. If a backup fails, or an anomaly is detected, you’ll be alerted, allowing you to address issues proactively. This ‘set it and forget it’ capability, combined with intelligent monitoring, truly transforms backup from a burdensome task into a seamless, reliable process.
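
If you’re curious what the ‘set it and forget it’ piece looks like under the hood, here is a minimal sketch using only Python’s standard library. The function name `make_backup` and the `backup-YYYYMMDD-HHMMSS` naming scheme are assumptions made for this example; the scheduling itself would be handled by whatever you already have, such as cron or Windows Task Scheduler.

```python
import shutil
from datetime import datetime
from pathlib import Path


def make_backup(source_dir, dest_dir, now=None):
    """Archive source_dir into dest_dir as a timestamped .tar.gz.

    Returns the path of the archive that was written.
    """
    now = now or datetime.now()
    dest = Path(dest_dir).resolve()
    dest.mkdir(parents=True, exist_ok=True)
    # Timestamped names mean each run adds a new archive instead of
    # overwriting the previous one.
    base_name = dest / f"backup-{now:%Y%m%d-%H%M%S}"
    archive = shutil.make_archive(str(base_name), "gztar", root_dir=str(source_dir))
    return Path(archive)
```

Keep the destination outside the source folder so an archive never tries to include itself, and have the scheduled job report a non-zero exit or send an alert if the call raises, so failures don’t go unnoticed.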

3. Smarter, Not Harder: Harnessing Incremental and Differential Backups

When we talk about backups, it’s easy to just think ‘copy everything.’ But for large datasets, or for systems that change frequently, copying absolutely everything every single time can be incredibly inefficient, consuming vast amounts of storage and bandwidth. That’s where more nuanced backup strategies like incremental and differential come into play, offering a much smarter approach.

- Full Backups: This is your baseline, a complete snapshot of all the data you want to protect at a specific moment. It’s comprehensive, easy to restore from (since everything’s in one place), but also the most time-consuming and storage-intensive option. You can’t avoid full backups entirely; they typically form the starting point of any backup sequence.

- Incremental Backups: These are the real efficiency champions. After an initial full backup, an incremental backup only saves the changes that have occurred since the last backup, regardless of whether that last backup was full or another incremental one. Imagine a library: the full backup is every book. The first incremental backup just adds the new books acquired since that full backup. The second incremental only adds books acquired since the first incremental. This method is incredibly fast and uses minimal storage because you’re only archiving small chunks of new or modified data. The catch? To restore a full system, you need the original full backup plus every subsequent incremental backup in the correct order. If even one incremental file is missing or corrupted, your entire recovery chain might break. This makes incremental backups powerful but requires robust management and careful integrity checks.

- Differential Backups: Sitting somewhere between full and incremental, differential backups record all changes made since the last full backup. Let’s go back to our library: after the initial full backup (all books), the first differential backup contains all books acquired since that full backup. The second differential backup also contains all books acquired since the same original full backup, so it supersedes the previous differential with an expanded set of changes. This means you only need the last full backup and the most recent differential backup to restore your data, which simplifies the restoration process compared to incrementals. However, differentials naturally grow larger over time until the next full backup is performed, making them less storage-efficient than incrementals but less fragile at recovery time, because the restore depends on two files rather than a long chain.

Choosing the right strategy often involves a hybrid approach. Many organizations implement a daily incremental backup schedule, perhaps with weekly differential backups, all anchored by a monthly or quarterly full backup. This balances speed, storage efficiency, and recovery simplicity. Understanding these nuances allows you to design a backup architecture that aligns perfectly with your data’s change rate and your recovery time objectives.
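
The difference in restore effort between the two approaches is easy to see in a small sketch. The function `restore_chain` and the day labels below are made up for illustration, and the sketch assumes an incremental is always taken relative to whatever backup immediately precedes it; real products may handle mixed schedules differently.

```python
def restore_chain(backups):
    """Return the labels needed to restore to the most recent backup.

    backups is a chronological list of (label, kind) pairs, where kind is
    "full", "incremental", or "differential".
    """
    chain = []
    i = len(backups) - 1
    while i >= 0:
        label, kind = backups[i]
        chain.append(label)
        if kind == "full":
            return list(reversed(chain))  # oldest first, ready to apply
        if kind == "differential":
            # A differential only needs the most recent full before it.
            i -= 1
            while i >= 0 and backups[i][1] != "full":
                i -= 1
        elif kind == "incremental":
            # An incremental needs the backup right before it, whatever it is.
            i -= 1
        else:
            raise ValueError(f"unknown backup kind: {kind}")
    raise ValueError("no full backup found to anchor the chain")


incremental_week = [("sun-full", "full"), ("mon", "incremental"),
                    ("tue", "incremental"), ("wed", "incremental")]
differential_week = [("sun-full", "full"), ("mon", "differential"),
                     ("tue", "differential"), ("wed", "differential")]

print(restore_chain(incremental_week))   # ['sun-full', 'mon', 'tue', 'wed']
print(restore_chain(differential_week))  # ['sun-full', 'wed']
```

Four files versus two: the incremental week needs every link in the chain, while the differential week needs only the full backup and the latest differential.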

4. The Proof is in the Pudding: Why You Must Regularly Test Your Backups

This is probably the single most critical, yet often overlooked, best practice. Seriously. I’ve seen countless instances where businesses diligently backed up their data for years, only to find, when disaster struck, that their backups were useless. It’s a gut-wrenching realization, akin to finding your parachute is full of holes just as you’re jumping out of the plane. A backup that can’t be restored successfully isn’t a backup; it’s a false sense of security.

Think of it this way: your backup system is essentially a fire extinguisher. You hope you never have to use it, but when you do, it has to work, right? You wouldn’t just buy an extinguisher and assume it’s good; you’d check its pressure gauge, wouldn’t you? Backups are no different. You absolutely must regularly test them.

So, how do you go about this? It’s more than just checking a log that says ‘backup successful.’

- Spot Checks: Periodically, pick a random file or folder from a recent backup and try to restore it to an alternate location. Can you open it? Is it intact? This simple test can reveal a lot about file corruption or permission issues.

- Full System Restores (Drill): For critical systems, you should ideally perform a full ‘bare metal’ restore to a separate, isolated environment. This is your ultimate test. Can you rebuild your entire server or application from your backup? Does it function as expected? This type of drill helps identify issues with the backup image itself, configuration settings, and even your documentation of the recovery process. It’s a vital part of your overall Disaster Recovery (DR) plan, giving you confidence when the real crisis hits.

- Verify Integrity: Many backup solutions offer built-in integrity checks that can scan your backup files for corruption. While not a substitute for an actual restore, these are useful as an ongoing health check.

The frequency of testing depends on your data’s criticality and your Recovery Point Objective (RPO) and Recovery Time Objective (RTO). For highly critical data, quarterly or even monthly restore tests aren’t unreasonable. For less critical data, perhaps twice a year. Document your tests, record any failures, and promptly address them. This proactive approach saves immense pain and financial loss down the line. Remember, it’s not if you’ll need to restore, but when. And when that day comes, you’ll want to be absolutely certain your safety net will catch you.
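
A spot check can be partly scripted. The sketch below restores nothing itself; it assumes you have already restored a folder to an alternate location and simply compares it against a reference copy using SHA-256 checksums. The names `sha256_of` and `verify_restore` are hypothetical. In practice the live data may have changed since the backup ran, so a manifest of checksums recorded at backup time is a better reference than the live folder.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(reference_dir, restored_dir):
    """Compare every file under reference_dir with its restored counterpart.

    Returns a list of (relative_path, problem) tuples; an empty list means
    every file was present and byte-for-byte identical.
    """
    reference_dir, restored_dir = Path(reference_dir), Path(restored_dir)
    problems = []
    for src in sorted(p for p in reference_dir.rglob("*") if p.is_file()):
        rel = src.relative_to(reference_dir)
        dst = restored_dir / rel
        if not dst.is_file():
            problems.append((str(rel), "missing"))
        elif sha256_of(src) != sha256_of(dst):
            problems.append((str(rel), "content differs"))
    return problems
```

A matching checksum tells you the bytes survived; it doesn’t tell you the application can actually use them, so it complements a real restore drill rather than replacing it.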

5. Lock It Down: The Imperative of Encrypting Your Backups

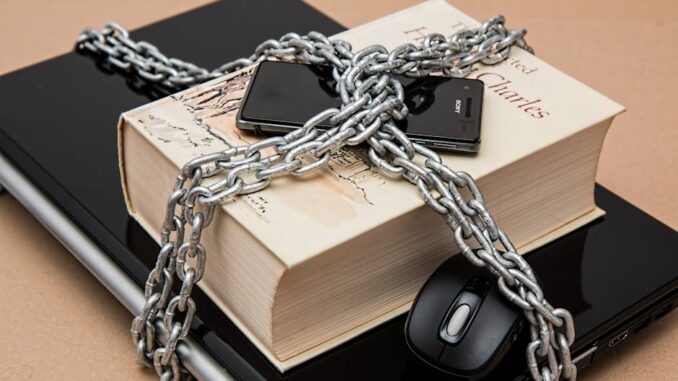

In an era where data breaches are practically daily news, leaving your backups unencrypted is like leaving your vault door wide open. It’s an invitation for trouble, plain and simple. Even if your primary data is heavily secured, an unencrypted backup stored on an external drive or in the cloud presents a massive vulnerability. Imagine that external drive being stolen, or an unauthorized person gaining access to your cloud storage. Without encryption, all your sensitive information is immediately exposed.

Encryption is your digital padlock, transforming your data into an unreadable jumble of characters for anyone without the correct decryption key. It’s not just about protecting against malicious actors, though that’s a huge part of it. It’s also about compliance. Many industry regulations (like HIPAA, GDPR, PCI DSS) and legal frameworks mandate encryption for sensitive data, both ‘at rest’ (when it’s stored) and ‘in transit’ (when it’s being moved). Failing to encrypt could lead to hefty fines and severe reputational damage.

So, what are your options?

- Software-based Encryption: Many modern backup software solutions offer robust encryption options. You typically set a strong passphrase or use a key file, and the software handles the encryption and decryption automatically during backup and restore processes.

- Hardware-based Encryption: Devices like Lexar Secure Storage Solutions, or self-encrypting drives (SEDs), offer encryption at the hardware level. This can sometimes provide better performance and a stronger security posture because the encryption is handled by a dedicated chip, independent of the operating system.

- Cloud Encryption: If you’re using cloud storage for your offsite backups, ensure that the provider offers encryption both for data in transit (using TLS, the successor to the now-deprecated SSL) and data at rest (server-side encryption). Better yet, consider client-side encryption, where you encrypt the data before it leaves your system, ensuring only you hold the keys.

The most crucial thing with encryption is key management. Lose your encryption key or passphrase, and your data is gone forever; not even you will be able to retrieve it. So, store your keys securely, perhaps in a separate, encrypted password manager or a secure physical location. Don’t write it on a sticky note under your keyboard, please! Encryption adds that vital layer of security, making your backups a fortress rather than an open invitation.
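
To see why a lost passphrase is fatal, it helps to look at how a passphrase typically becomes an encryption key. The sketch below shows only that key-derivation step, using PBKDF2 from Python’s standard library; it doesn’t encrypt anything, and the actual encryption is best left to your backup software or a vetted cryptography library. The function name `derive_key` and the iteration count are assumptions chosen for illustration.

```python
import hashlib
import os


def derive_key(passphrase, salt=None, iterations=600_000):
    """Derive a 32-byte key from a passphrase with PBKDF2-HMAC-SHA256.

    Returns (key, salt). The salt is not secret, but it must be stored
    alongside the backup, because the same passphrase with a different
    salt produces a different key.
    """
    if salt is None:
        salt = os.urandom(16)  # fresh random salt for a new backup set
    key = hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode("utf-8"), salt, iterations, dklen=32
    )
    return key, salt
```

The same passphrase and salt always yield the same key, which is how a restore can decrypt what the backup encrypted. Change a single character of the passphrase and you get an unrelated key, and there’s no way to work backwards.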

6. Who Gets the Keys? Implementing Strong Access Controls for Backups

It doesn’t matter how great your backup strategy is if the wrong people can mess with it. This is where strong access controls come into play, essentially defining who can do what with your backup systems and data. It’s about putting boundaries in place, ensuring that only authorized individuals have the permissions necessary to initiate, modify, or restore backups.

Think about a secure facility. Not everyone has a master key, do they? Different employees have access to different areas based on their job functions. The same principle applies to your digital assets. Implementing Role-Based Access Control (RBAC) is a cornerstone here. Instead of granting individual permissions to every user, you define roles (e.g., ‘Backup Administrator,’ ‘Help Desk Support,’ ‘Data Owner’), and then assign permissions to those roles. Users are then assigned to the appropriate roles. This simplifies management and significantly reduces the risk of accidental or malicious data loss.

Consider these aspects:

- Principle of Least Privilege: This is foundational. Users and systems should only be granted the minimum level of access required to perform their specific tasks, and no more. A help desk technician, for example, might need permission to initiate a single file restore for a user, but they shouldn’t have the ability to delete entire backup sets or modify backup schedules.

- Segregation of Duties: Where possible, separate the responsibilities for backup administration from other critical IT functions. This prevents a single individual from having too much power or inadvertently creating a single point of failure.

- Regular Review: Access permissions aren’t static. People change roles, leave the company, or their responsibilities evolve. Regularly review and audit who has access to your backup systems and ensure those permissions are still appropriate. Stale accounts or over-privileged users are prime targets for attackers.

By meticulously managing access, you’re not just preventing unauthorized alterations; you’re also significantly reducing the internal risk of human error. It’s another layer in your data defense, making your backups more resilient against both external threats and internal mistakes.
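
Here is a minimal sketch of how role-based access control and least privilege fit together. The role names echo the examples above, but the specific actions, the users, and the `is_allowed` function are all invented for illustration; real backup platforms have their own permission models.

```python
# Permissions are attached to roles, never directly to users.
ROLE_PERMISSIONS = {
    "backup_admin": {"run_backup", "restore_file", "restore_system",
                     "edit_schedule", "delete_backup"},
    "help_desk": {"restore_file"},
    "data_owner": {"restore_file", "view_reports"},
}

# Users are then assigned to one or more roles.
USER_ROLES = {
    "alice": {"backup_admin"},
    "bob": {"help_desk"},
}


def is_allowed(user, action):
    """Allow an action only if one of the user's roles grants it.

    Unknown users and unknown roles get no permissions (deny by default).
    """
    roles = USER_ROLES.get(user, set())
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed("bob", "restore_file"))   # True
print(is_allowed("bob", "delete_backup"))  # False
```

When someone changes jobs, you update one entry in `USER_ROLES` rather than hunting down individual permissions, which is what makes the regular reviews manageable.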

7. Beyond the Walls: Why Offsite Backup Storage is Non-Negotiable

We touched upon this in the 3-2-1 rule, but it bears repeating with much more emphasis: an offsite copy is not a nice-to-have, it’s an absolute necessity. Imagine a fire engulfs your office building, or a natural disaster like a hurricane or flood renders your entire physical location unusable. If all your backups, even your ‘local’ redundant ones, are stored within that same physical perimeter, they’re gone too. Your entire business could cease to exist. That’s a terrifying thought, isn’t it?

Offsite storage provides that critical geographical separation, acting as your ultimate safeguard against site-specific disasters. It ensures that even if your primary site is completely compromised, destroyed, or inaccessible, your data remains safe and sound, ready for recovery from an entirely different location.

So, what are the practical options for achieving this essential separation?

- Cloud Backup Solutions: For many, especially smaller businesses and individuals, cloud storage is the most convenient and cost-effective offsite solution. Services like AWS S3, Microsoft Azure Blob Storage, Google Cloud Storage, Backblaze, or dedicated backup-as-a-service (BaaS) providers automatically replicate your data to geographically dispersed data centers. This offers incredible resilience and accessibility, often with built-in encryption and scalability.

- Dedicated Disaster Recovery (DR) Sites: Larger enterprises might opt for a dedicated secondary data center located hundreds or even thousands of miles away from their primary site. This allows for near real-time replication of data and even entire system failover in the event of a primary site failure, offering the lowest RTOs.

- Physical Media Transport: For organizations with extremely large datasets or specific compliance requirements, physically transporting encrypted hard drives or tape cartridges to a secure offsite vault or facility is still a viable option. While slower for recovery, it provides an air-gapped solution that’s immune to network-based attacks.

When considering offsite storage, you’ll want to think about the distance. Is it far enough away to be safe from a regional power grid failure, or even a local natural disaster? Is the connection to your offsite location reliable and secure? The goal here is to ensure business continuity, to give yourself the ability to stand back up, regardless of what havoc unfolds at your primary location. It’s peace of mind wrapped in a very smart strategic decision.

8. Don’t Forget the Edges: Protecting Your Endpoints

In the grand scheme of things, it’s easy to focus on servers and central databases, isn’t it? But often, the weakest links in your data protection chain are the devices at the very edge of your network: your endpoints. Laptops, desktops, smartphones, tablets, and even IoT devices – these are the places where data is often created, accessed, and stored, and they’re also prime targets for attackers. A single compromised laptop can be the gateway for ransomware to spread, encrypting local files and potentially reaching network drives before your central backup systems can capture the damage.

Protecting these endpoints is paramount, and it requires a multi-faceted approach:

- Up-to-Date Antivirus and Anti-malware: This is non-negotiable. Ensure every endpoint has robust, centrally managed antivirus software that’s regularly updated with the latest threat definitions. This acts as the first line of defense against known malware, viruses, and ransomware.

- Firewalls: Both host-based firewalls (on individual devices) and network firewalls are essential. They control incoming and outgoing network traffic, preventing unauthorized access and blocking malicious connections.

- Strong Passwords and Multi-Factor Authentication (MFA): Simple, weak passwords are an open invitation for hackers. Enforce complex password policies and, crucially, implement MFA wherever possible. An extra verification step, like a code from your phone, is remarkably effective; Microsoft has reported that MFA can block more than 99.9% of account compromise attacks.

- Regular Software Updates and Patching: Keep operating systems, applications, and firmware updated. Software vulnerabilities are constantly discovered, and patches are released to fix them. Delaying updates leaves gaping security holes that attackers eagerly exploit.

- Endpoint Detection and Response (EDR) / Extended Detection and Response (XDR) Solutions: For a more advanced defense, EDR/XDR tools offer continuous monitoring, threat detection, and automated response capabilities directly on endpoints, giving you deeper visibility and control.

- User Education: Perhaps the most critical, yet often underestimated, aspect. Employees are often the first point of contact for phishing attacks, social engineering, and other deceptive tactics. Regular training on cybersecurity best practices, how to recognize suspicious emails, and safe data handling procedures transforms your workforce into a ‘human firewall.’ Because honestly, a fully patched system with the best antivirus won’t save you if someone clicks on a malicious link they shouldn’t have.

By fortifying your endpoints, you’re not just protecting the data on those devices; you’re also safeguarding your entire network and, by extension, your backup integrity.

9. Keep an Eye on Things: Monitoring and Auditing Backup Activities

Think of your backup system as a complex machine. You wouldn’t just turn it on and walk away hoping for the best, would you? You’d want to know it’s running smoothly, isn’t overheating, and isn’t showing any warning lights. The same goes for your backup infrastructure. Continuous monitoring and regular auditing aren’t just good practices; they’re vital for maintaining the health, integrity, and security of your backups.

- Real-time Monitoring: Most professional backup solutions provide dashboards and logs that allow you to see the status of your backups in real-time. Are they completing successfully? Are there any errors or warnings? Are they running on schedule? Setting up automated alerts for failed backups, unusual activity, or storage capacity issues is absolutely critical. You want to know immediately if something goes wrong, not find out weeks later when you desperately need to restore something.

- Log Review: Beyond real-time alerts, a consistent review of backup logs is crucial. These logs can reveal patterns, recurring minor issues that might escalate, or even attempts at unauthorized access. It’s a bit like being a detective, looking for clues that something might be amiss.

- Audit Trails: Who accessed the backup system? When did they do it? What actions did they perform (e.g., initiating a restore, deleting a backup set, changing settings)? Robust audit trails provide an irrefutable record of activities, which is invaluable for security investigations, compliance requirements, and accountability. If there’s ever a question about data integrity or a potential breach, these logs become your primary source of truth.

- Integration with SIEM (Security Information and Event Management) Systems: For larger organizations, integrating backup system logs with a central SIEM platform allows for consolidated monitoring, correlation of events across different systems, and more sophisticated threat detection.

By constantly watching over your backup activities, you’re not just ensuring they’re working; you’re actively guarding against anomalies, catching potential problems early, and maintaining the ultimate trustworthiness of your data recovery capabilities.
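
The alerting logic itself can be very simple. This sketch assumes you can get each job’s last status and last successful run time out of your backup tool; the `jobs` structure, the 26-hour default threshold, and the `find_alerts` function are hypothetical choices made for the example.

```python
from datetime import datetime, timedelta


def find_alerts(jobs, now, max_age=timedelta(hours=26)):
    """Return alert messages for failed or stale backup jobs.

    jobs maps a job name to (last_status, last_success_time), where
    last_success_time is a datetime or None if the job never succeeded.
    """
    alerts = []
    hours = max_age.total_seconds() / 3600
    for name, (status, last_success) in sorted(jobs.items()):
        if status != "success":
            alerts.append(f"{name}: last run reported '{status}'")
        # A job can report success and still be stale if it stopped running.
        if last_success is None or now - last_success > max_age:
            alerts.append(f"{name}: no successful backup in the last {hours:.0f} hours")
    return alerts
```

Checking the age of the last success, and not just the last status, catches the quiet failure mode where a job simply stops being scheduled and nothing ever reports an error.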

10. Rewinding Time: The Strategic Importance of Version Control

Have you ever accidentally saved over an important document, or worse, realized days later that a critical spreadsheet had been subtly corrupted by an innocent mistake? It happens, doesn’t it? Without version control in your backup strategy, you’d be stuck with the corrupted version or the last good backup, which might be hours or even days old. This is where the magic of version control truly shines, offering you the ability to ‘rewind time’ and recover specific, uncorrupted states of your data.

Version control means maintaining multiple historical copies of your files or entire datasets. Instead of just overwriting the previous backup with the newest one, your system stores several different ‘versions’ from various points in time.

- Protection Against Gradual Corruption: Imagine a virus that slowly corrupts files over weeks before it’s detected. A simple ‘last backup only’ strategy would mean your backups are also corrupted. With version control, you can roll back to a point before the corruption began, potentially saving you from irreversible data loss.

- Ransomware Defense: This is a huge one. Ransomware encrypts your files, making them unusable. If your backups only keep the most recent copy, you might end up backing up your encrypted data! Version control allows you to go back to a clean, unencrypted version of your files from before the attack. This is a powerful countermeasure.

- Accidental Deletion/Modification: Simple human errors are incredibly common. A user accidentally deletes a critical folder, or makes a change they later regret. With version control, restoring that specific file or folder to an earlier state is often a quick and painless process.

When implementing version control, you’ll need to define your retention policies. How many versions do you need to keep? For how long?

- Short-term retention: Daily backups for the last 7 days, then weekly for the last month.

- Long-term retention: Monthly backups for the last year, yearly backups for 7 years (or as dictated by compliance requirements).

These policies should be aligned with the criticality of the data and any regulatory obligations. While maintaining many versions consumes more storage, the peace of mind and the ability to recover from a wider range of disaster scenarios, including those insidious, slow-burn issues, makes it an investment well worth making.
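
The retention tiers above translate into a short rule. This sketch assumes one backup per calendar day and picks Sundays as the weekly copy, the 1st of the month as the monthly copy, and 1 January as the yearly copy; those anchor days and the function name `backups_to_keep` are assumptions, not a standard.

```python
from datetime import date, timedelta


def backups_to_keep(backup_dates, today):
    """Return the set of backup dates to retain under a tiered policy.

    Tiers: every backup from the last 7 days, Sundays from the last month,
    the 1st of each month from the last year, and 1 January for 7 years.
    """
    keep = set()
    for d in backup_dates:
        age = (today - d).days
        if age < 0:
            continue  # ignore dates in the future
        daily = age < 7
        weekly = age < 31 and d.weekday() == 6  # Monday is 0, Sunday is 6
        monthly = age < 366 and d.day == 1
        yearly = age < 7 * 366 and d.month == 1 and d.day == 1
        if daily or weekly or monthly or yearly:
            keep.add(d)
    return keep
```

Anything not in the returned set is a candidate for pruning. It’s worth running a rule like this in report-only mode first, so you can see what would be deleted before anything actually is.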

11. Tailoring Your Safety Net: Aligning Backup Strategy with Business Needs (RTO & RPO)

Not all data is created equal, is it? Your company’s financial records are likely far more critical than, say, a collection of old internal memos. Therefore, a ‘one-size-fits-all’ backup strategy simply won’t cut it. To build a truly effective and efficient backup plan, you must meticulously align it with your specific business needs, particularly focusing on your Recovery Time Objective (RTO) and Recovery Point Objective (RPO).

These two metrics are the bedrock of any solid disaster recovery plan:

- Recovery Time Objective (RTO): This is the maximum acceptable downtime after a disaster. How quickly must your systems and data be operational again? If your RTO for a critical system is 2 hours, then your backup and recovery strategy must be capable of restoring that system within that timeframe. For some mission-critical applications, the RTO might be minutes, requiring sophisticated, high-availability solutions. For a non-essential internal file server, an RTO of 24-48 hours might be perfectly acceptable.

- Recovery Point Objective (RPO): This defines the maximum acceptable amount of data you can afford to lose. How much data loss are you willing to tolerate, measured in time? An RPO of 1 hour means you can only afford to lose up to an hour’s worth of data. This directly dictates your backup frequency. If your RPO is 1 hour, you need to be backing up at least every hour, or even continuously. If your RPO is 24 hours, daily backups might suffice.

Data Classification is Key:

Before you can set RTOs and RPOs, you need to classify your data. Categorize it based on criticality, regulatory requirements, and business impact.

- Tier 0 (Mission Critical): Data that, if lost or unavailable for even a few minutes, would cause severe financial, reputational, or legal damage (e.g., transaction databases, customer-facing applications). These demand the lowest RTO/RPO, perhaps near-zero data loss and instantaneous recovery.

- Tier 1 (Business Critical): Data that is essential for daily operations but can tolerate a few hours of downtime or data loss (e.g., email servers, internal CRM).

- Tier 2 (Important): Data that is valuable but can tolerate longer recovery times (e.g., departmental shared drives, older project files).

- Tier 3 (Non-critical/Archival): Data that has little immediate business impact if lost or unavailable for longer periods (e.g., historical archives, non-essential logs).

Once you’ve classified your data, you can tailor your backup frequency and recovery mechanisms. Mission-critical data might warrant continuous data protection (CDP) or real-time replication. Business-critical data might get daily or hourly incremental backups. Less critical data could be backed up weekly or even monthly. This segmented approach ensures you’re investing your resources wisely, providing the highest level of protection to your most valuable assets without overspending on less critical information. It’s about smart resource allocation and effective risk management.
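
The link between tiers, RPO, and backup frequency can be written down as a simple check. The target values in `TIER_TARGETS` are illustrative placeholders only, and the function names are hypothetical; your own numbers should come out of a business impact analysis.

```python
from datetime import timedelta

# Illustrative targets per tier; real values are a business decision.
TIER_TARGETS = {
    0: {"rpo": timedelta(minutes=5), "rto": timedelta(minutes=15)},
    1: {"rpo": timedelta(hours=4), "rto": timedelta(hours=8)},
    2: {"rpo": timedelta(hours=24), "rto": timedelta(hours=48)},
    3: {"rpo": timedelta(days=7), "rto": timedelta(days=7)},
}


def meets_rpo(tier, backup_interval):
    """Worst-case data loss is the gap between two backups, so the
    interval between backups must not exceed the tier's RPO."""
    return backup_interval <= TIER_TARGETS[tier]["rpo"]


def meets_rto(tier, measured_restore_time):
    """Compare a restore time measured in a drill against the tier's RTO."""
    return measured_restore_time <= TIER_TARGETS[tier]["rto"]


print(meets_rpo(2, timedelta(hours=24)))  # True: daily backups fit a 24-hour RPO
print(meets_rpo(1, timedelta(hours=24)))  # False: daily backups miss a 4-hour RPO
```

Note that `meets_rto` only means something when it’s fed a restore time you actually measured during a test, which is one more reason restore drills matter.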

12. The Unbreakable Shield: Implementing Immutable Backups

In today’s cybersecurity landscape, especially with the relentless rise of ransomware, simply having backups isn’t enough. Bad actors are increasingly sophisticated, targeting your backups directly to prevent recovery and force a ransom payment. This is where immutable backups become an absolute game-changer. Think of them as backups etched in stone, completely impervious to alteration or deletion.

What does ‘immutable’ truly mean in this context? It means that once a backup is written, it cannot be changed, encrypted, deleted, or even accidentally altered for a specified period. Not by a hacker, not by ransomware, not even by an administrator with full permissions. It’s ‘write once, read many’ (WORM) storage for your backups, ensuring their integrity against even the most insidious attacks.

Here’s why immutable backups are so incredibly powerful:

- Ransomware Protection: This is their primary superpower. If ransomware encrypts your live data and then attempts to reach your backups to encrypt or delete them, it will fail. Your immutable backups remain pristine, providing a clean, uninfected recovery point. This can be the difference between paying a multi-million dollar ransom and restoring your operations quickly.

- Protection Against Insider Threats: Whether it’s a disgruntled employee attempting to sabotage data or an accidental deletion by an administrator, immutability protects against internal malicious or erroneous actions.

- Compliance and Legal Hold: Many regulatory frameworks require data to be stored in an unalterable state for specific periods. Immutable storage naturally aligns with these requirements, making compliance easier to demonstrate. For legal holds, it ensures that relevant data cannot be tampered with.

- Air-Gapped Equivalent: While not a true air gap (which is physical separation), immutable cloud storage solutions offer a logical air gap, isolating your backups from your live environment in a way that prevents them from being impacted by compromises to your primary systems.

Implementing immutable backups typically involves leveraging specific features of cloud storage providers (like S3 Object Lock on AWS, or immutable storage for Azure Blob Storage) or specialized backup appliances. You define a ‘retention lock’ period, and for that duration, the backup remains unalterable. How strict that lock is depends on the mode you choose, so check whether privileged accounts can lift it early. Configured properly, this provides an almost impenetrable layer of defense, ensuring that when the worst happens, you have a guaranteed, clean slate to rebuild from. It’s an investment that pays dividends in peace of mind and organizational resilience.
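
The behavior of a retention lock is easier to grasp with a toy model. The class below is an in-memory simulation written purely for illustration; it isn’t how any provider implements immutability, and the name `ImmutableStore` is made up. Real immutability has to be enforced by the storage layer itself, outside the reach of the systems being backed up.

```python
from datetime import datetime, timedelta


class ImmutableStore:
    """Toy write-once store: objects cannot be overwritten at all, and
    cannot be deleted until their retention period has passed."""

    def __init__(self):
        self._objects = {}  # name -> (data, retain_until)

    def write(self, name, data, retain_for, now):
        if name in self._objects:
            raise PermissionError(f"{name} already exists and cannot be overwritten")
        self._objects[name] = (bytes(data), now + retain_for)

    def read(self, name):
        return self._objects[name][0]

    def delete(self, name, now):
        _, retain_until = self._objects[name]
        if now < retain_until:
            raise PermissionError(f"{name} is locked until {retain_until.isoformat()}")
        del self._objects[name]
```

Ransomware that tries to overwrite or delete a locked backup hits the same `PermissionError` an administrator would, and reads keep working throughout, which is the ‘write once, read many’ behavior in miniature.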

13. Your Strongest Defense: Educating and Training Employees

We’ve talked a lot about technology, haven’t we? Firewalls, encryption, automation. But even the most sophisticated technological defenses can be rendered useless by a single click from an unsuspecting employee. Human error, negligence, or lack of awareness remains one of the leading causes of data breaches and data loss. This makes your employees not just users of your systems, but your first, and often most critical, line of defense.

Investing in comprehensive and ongoing employee training isn’t just a compliance checkbox; it’s a strategic imperative. An informed and cyber-aware workforce significantly reduces your attack surface and bolsters your overall data security posture.

What should this training encompass?

- Phishing and Social Engineering Awareness: These remain among the most prevalent attack vectors. Teach employees how to identify suspicious emails, malicious links, and the deceptive tactics attackers use to trick them into revealing credentials or opening dangerous attachments. Real-world examples and simulated phishing tests can be incredibly effective.

- Strong Password Practices and MFA: Reinforce the importance of complex, unique passwords and the critical role of multi-factor authentication in preventing unauthorized access, even if a password is stolen.

- Safe Data Handling Procedures: Explain how to properly store, transmit, and dispose of sensitive data. Where should confidential documents be saved? How should large files be shared securely? What are the rules for using personal devices for work?

- Identifying and Reporting Suspicious Activity: Empower employees to be vigilant. If something looks or feels ‘off,’ they need to know who to report it to and how to do it immediately, without fear of reprisal. Early detection can prevent a minor incident from becoming a major crisis.

- Mobile Device Security: With more people working remotely and using personal devices, training on securing mobile endpoints (e.g., screen locks, app permissions, avoiding public Wi-Fi for sensitive tasks) is crucial.

- Regular Refreshers: Cybersecurity threats evolve constantly. One-off training isn’t enough. Regular, engaging refresher courses keep security top-of-mind and update employees on the latest threats and best practices.

Think of it this way: your IT security team builds the fortress, but your employees are the sentinels. If the sentinels aren’t trained, alert, and equipped, the fortress is vulnerable. By transforming your employees into active participants in your data protection strategy, you’re building a truly resilient organization where data loss is not just avoided, but actively defended against at every level.

Conclusion: Your Data’s Future, Secured Today

So there you have it, a comprehensive, multi-layered approach to data backup that goes far beyond just ‘copying files.’ In our increasingly digital world, where data is king and threats lurk around every corner, a robust backup strategy isn’t merely a technical task; it’s a fundamental business imperative. It’s the ultimate insurance policy, the peace of mind that allows you to innovate, grow, and operate without the constant specter of catastrophic data loss.

Remember, the goal isn’t just to have backups; it’s to have recoverable, secure, and resilient backups. From adhering to the steadfast 3-2-1 rule to empowering your human firewall, these best practices work together to form a strong shield for your most valuable asset. Don’t wait until disaster strikes to discover the cracks in your armor. Be proactive, be diligent, and safeguard your digital future. After all, isn’t your data worth protecting with every tool at your disposal?

“Unshakeable” indeed! Makes me wonder, does anyone have a data backup strategy robust enough to survive the dreaded toddler test? Asking for a friend… whose cloud backups are currently battling a rogue juice box incident.

Haha, the toddler test! Now that’s a scenario not often covered in the textbooks. Perhaps we need to add a new layer to the 3-2-1 rule: the ‘childproofing protocol’. What measures would stand up to a determined toddler and their juice box? I’m curious to hear any creative solutions!

Editor: StorageTech.News

Thank you to our Sponsor Esdebe

The emphasis on regularly testing backups is so important. Many organizations overlook this critical step, assuming their backups are reliable until a crisis reveals otherwise. Implementing routine restore drills can highlight vulnerabilities before they become major problems.

Absolutely! Routine restore drills are like fire drills for your data. They not only validate your backups but also reveal gaps in your recovery process. What types of restore drills have you found most effective for identifying those hidden vulnerabilities?

Editor: StorageTech.News

A “digital fortress,” you say? Does this fortress include a moat filled with old floppy disks? Because that’s the kind of security I can get behind… or, you know, just trip over.

Haha! A floppy disk moat… that’s a security feature with a certain *vintage* charm! It also reminds us that data security is constantly evolving. What ‘moat’ strategies do you think will be most effective in the next 5-10 years?

Editor: StorageTech.News

A “digital fortress” sounds impressive, but does it have a secret escape tunnel in case the main gate is breached? Asking for a friend who may or may not have just accidentally locked themselves out of their own fortress… hypothetically speaking, of course.

Haha, a secret escape tunnel is crucial! It’s like having a Plan B for your Plan B. Speaking of which, what’s your go-to method for quickly accessing data if the primary system is temporarily down? Always good to have a few tricks up your sleeve!

Editor: StorageTech.News

A digital fortress, huh? But is it future-proofed against quantum computing? Asking for a friend whose data might soon be Schrödinger’s data – both secure and compromised until observed.

That’s a fantastic point! Quantum computing definitely throws a wrench into the traditional security model. While full quantum-resistant encryption is still evolving, exploring post-quantum cryptography algorithms is crucial for long-term data protection. It’s about layering defenses and preparing for the future of computing!

Editor: StorageTech.News

The concept of immutable backups as a defense against ransomware is compelling. Exploring how organizations can balance the security benefits of immutability with the need for flexibility in data management and compliance would be valuable.

That’s a great point! Balancing immutability with data management flexibility is definitely a key consideration. Perhaps organizations could implement tiered immutability, applying longer retention locks to critical data and shorter ones to less sensitive information. It’s all about finding the right equilibrium!

Editor: StorageTech.News

The guide emphasizes immutable backups as a defense against ransomware. Beyond ransomware, what other data integrity challenges can immutability effectively address, and how might its application evolve as data governance regulations become more stringent?

That’s a really insightful question! Immutable backups are also great for protecting against accidental data deletion or corruption from software bugs. As data governance tightens, immutability could evolve to automatically enforce data retention policies, ensuring compliance by preventing premature deletion of records. Thanks for sparking this discussion!

Editor: StorageTech.News

The guide mentions the importance of RTO and RPO. How are organizations measuring the business impact of downtime and data loss to accurately determine appropriate RTO/RPO targets, especially considering less quantifiable factors like reputational damage?

That’s a really important point! Quantifying the business impact is tricky but crucial. Some organizations use historical data on revenue loss during outages, customer churn rates after data breaches, and even surveys to gauge potential reputational damage. A comprehensive risk assessment often helps tie these factors together to set realistic RTO/RPO targets. What methods have you found effective?

Editor: StorageTech.News

The guide highlights employee training, especially regarding phishing. Simulated phishing attacks are a great tool, and incorporating the results into personalized training modules could further strengthen this defense. Does anyone have experience implementing this type of tailored approach?

Great point about personalized training! We’ve seen some success using aggregated data from simulated phishing campaigns to identify common knowledge gaps within different departments. This allows us to create targeted modules addressing the specific vulnerabilities of each group. It’s more impactful than generic training. Has anyone else tried segmenting their training like this?

Editor: StorageTech.News

The guide mentions the importance of employee training. Could you elaborate on methods for continually assessing the effectiveness of security awareness programs beyond simulated phishing campaigns? How can organizations ensure knowledge translates to behavioral change over time?

That’s a great question! Beyond phishing simulations, tracking employee behavior in real-world scenarios (e.g., analyzing click-through rates on security-related communications, monitoring adherence to password policies) can provide valuable insights. Positive reinforcement, recognizing and rewarding secure behaviors, can also help solidify long-term change. What methods have you seen work well?

Editor: StorageTech.News

The guide emphasizes tailoring backup strategies to RTO and RPO. What methodologies do organizations employ to accurately assess and prioritize various data types according to their criticality and associated business impact?

That’s a critical question. A lot of organizations utilize a combination of interviews with key stakeholders and detailed data flow diagrams to understand the usage and dependencies of various data assets. Some also use business impact analysis workshops to quantify potential losses! It’s a detailed approach. What’s worked best in your experience?

Editor: StorageTech.News

The guide highlights the importance of aligning backup strategies with RTO and RPO. Regularly reviewing and adjusting these objectives, alongside business priorities and potential impacts, is crucial for maintaining an effective and relevant data protection plan.

Thanks for highlighting that! Regularly revisiting RTO/RPO isn’t just a best practice; it’s an ongoing necessity as business landscapes evolve. How often do you recommend organizations reassess these objectives to stay aligned with changing priorities and potential impacts? It would be interesting to hear other people’s thoughts.

Editor: StorageTech.News

The guide highlights classifying data based on criticality. Exploring how AI-driven tools could automate this classification process based on usage patterns and data sensitivity could streamline the alignment of backup strategies with business needs.

That’s an excellent point! AI could definitely revolutionize data classification. Imagine AI algorithms learning from employee behavior to automatically categorize data based on access frequency and handling patterns. I can see this becoming a great way of automating the process to improve backup efficiency.

Editor: StorageTech.News

The guide highlights the importance of classifying data based on criticality to align backup strategies with business needs. Considering regulatory requirements during this data classification process further ensures compliance and minimizes potential legal ramifications.