Safeguarding Tomorrow’s Insights: A Deep Dive into the UK Data Archive

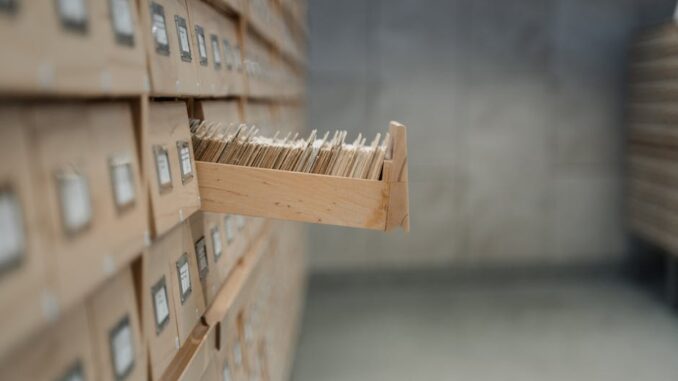

Imagine a vast, invisible library, not of dusty tomes but of digital information, meticulously cataloged and preserved for generations to come. This isn’t science fiction; it’s the reality embodied by the UK Data Archive. A cornerstone of the challenging yet crucial work of data preservation, it plays a pivotal role in safeguarding the nation’s rich, complex data heritage. Established back in 1967 at the University of Essex, it has matured into the United Kingdom’s largest digital repository for social sciences and humanities data, an impressive feat if you ask me. Honestly, it’s more than just a storage facility; it’s a testament to the foresight of its founders and the ongoing dedication of its team, ensuring that yesterday’s observations can still inform tomorrow’s breakthroughs. They’ve built something truly special here, a real national treasure in the digital age. Back when it started, digital data was a nascent concept, and the foresight to begin archiving then is remarkable. We’re talking about a time when punch cards were still a thing for some folks! This long-term vision has, I’d argue, shaped our capacity for robust research and evidence-based policy for decades.

The Unwavering Core: Mission, Scope, and a Digital Renaissance

The Archive’s mission, articulated with refreshing clarity, is anything but simple: to acquire, curate, and provide access to an incredibly vast and diverse array of datasets that actively support research across an almost bewildering range of disciplines. Over the many decades since its inception, it has diligently amassed a collection that quite literally spans historical and contemporary data, embracing everything from the intricate nuances of social sciences and the hard facts of economics to the rich tapestry of humanities and so much more. But what does that really mean in practice? It’s not just about collecting files, you know. It’s about a relentless, ongoing effort to identify valuable datasets, negotiate their acquisition, then process them so they’re genuinely useful for everyone.

The Art of Acquisition: More Than Just Receiving Files

Acquiring data isn’t a passive act for the Archive; it’s a proactive, strategic process. They don’t just wait for researchers to hand over their data; rather, they actively engage with academic institutions, government bodies, and individual researchers, often long before a project even concludes. This proactive approach involves scanning the research landscape for significant studies, offering guidance on data management plans from the outset, and collaborating on grant applications to ensure proper data archiving is factored into project lifecycles. Think about it: without this foresight, countless unique datasets might simply vanish when a research project ends, a true loss for future inquiry. They also play a crucial role in advising on legal and ethical considerations surrounding data transfer, navigating the labyrinthine regulations of data protection and intellectual property, ensuring that everything they take in is above board, accessible, and compliant. It’s a hugely collaborative effort, connecting with funders and researchers alike to emphasize data stewardship as a core component of good research practice.

Curation: The Unsung Hero of Data Longevity

Once acquired, data undergoes a meticulous curation process, which, frankly, is the unsung hero of long-term data accessibility. This isn’t merely about throwing files onto a server; it’s an intricate dance of digital forensics and meticulous organization. First, there’s the critical step of data cleaning and validation, identifying and rectifying inconsistencies or errors that could skew research findings. Then, anonymization and de-identification procedures are applied, particularly to sensitive human subject data, ensuring individual privacy is rigorously protected while retaining the data’s research utility. It’s a delicate balance, one they manage with incredible expertise. This is followed by the painstaking creation of rich, standardized metadata – essentially, data about the data. This metadata is absolutely vital, describing everything from the data’s origin and methodology to its content and potential uses, making it genuinely ‘findable’ in that vast digital library we talked about earlier. Without robust metadata, even the most valuable dataset could remain effectively hidden. Lastly, data format migration is often necessary, moving data from proprietary or obsolete formats to open, sustainable ones, protecting against digital decay and ensuring future usability. It’s a constant battle against technological obsolescence, believe me. I mean, remember Zip drives? Yeah, exactly, it’s that kind of forward-thinking preservation that keeps the data relevant and usable.
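To make the anonymization step a little more concrete, here is a minimal sketch in Python. It is not the Archive’s actual procedure: the column names (`name`, `email`, `age`, `region`), the helper functions, and the ten-year age bands are all assumptions chosen for illustration. It shows two common ideas, dropping direct identifiers and coarsening a precise value into a band.

```python
import csv
import io

# Hypothetical column names; a real study defines its own direct identifiers.
DIRECT_IDENTIFIERS = {"name", "email"}


def band_age(age: int, width: int = 10) -> str:
    """Replace an exact age with a coarser band such as '30-39'."""
    lower = (age // width) * width
    return f"{lower}-{lower + width - 1}"


def deidentify(rows):
    """Drop direct identifiers and coarsen age in each record."""
    cleaned = []
    for row in rows:
        kept = {k: v for k, v in row.items() if k not in DIRECT_IDENTIFIERS}
        if "age" in kept:
            kept["age"] = band_age(int(kept["age"]))
        cleaned.append(kept)
    return cleaned


# Tiny in-memory example standing in for a deposited survey file.
raw = io.StringIO("name,email,age,region\nAda,ada@example.org,34,East\n")
records = deidentify(list(csv.DictReader(raw)))
print(records)
```

Real de-identification goes well beyond this, since combinations of seemingly harmless fields can still identify someone, but the sketch shows why privacy and research utility pull against each other: every coarsened value is a little less useful to an analyst.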

Democratizing Access: Who Benefits, and How?

So, who actually gets to delve into this treasure trove? While predominantly serving academic researchers across the globe, the Archive’s reach extends much further. Policymakers frequently utilize these datasets to inform government strategies and evaluate the impact of existing interventions. Students, from undergraduates crafting their first research papers to doctoral candidates embarking on groundbreaking dissertations, find an invaluable resource for learning and discovery. Even the curious public can often access aggregated or anonymized datasets, fostering greater public understanding of social trends and scientific findings. The Archive’s tiered access system, ranging from fully open-access data to highly restricted controlled-access environments for the most sensitive information, ensures a balance between broad utility and robust privacy protection. It’s about democratizing access to knowledge, without compromising the ethical commitments researchers make.

The Evolving Landscape of Data: Beyond Numbers

What kind of data are we talking about here? It’s far more diverse than you might initially imagine. While traditionally strong in quantitative social surveys like the British Social Attitudes Survey or the UK Household Longitudinal Study (Understanding Society), its scope has broadened considerably. It now includes rich qualitative datasets (think oral histories, ethnographic interviews, and textual archives), which offer nuanced insights often missed by purely numerical approaches. Official statistics, historical records stretching back centuries, geospatial data, and even born-digital administrative records are all finding a home within its servers. The evolution of data types parallels the evolution of research itself, and the Archive has impressively kept pace, adapting its methodologies and infrastructure to accommodate increasingly complex and varied information types. Seriously, the sheer variety housed there is mind-boggling; it’s a cross-section of British life and history.

The Engine Room: Data Preservation and the FAIR Principles in Action

Ensuring data remains not only preserved but genuinely accessible and usable for future generations is no small feat; it’s a monumental, ongoing challenge. The Archive addresses this by applying rigorous standards to preserve data integrity and facilitate easy, intuitive access. At its heart, guiding every decision, are the globally recognized FAIR principles: Findable, Accessible, Interoperable, and Reusable. These aren’t just buzzwords; they’re the architectural blueprint for effective digital stewardship, ensuring that data is not merely stored away but remains a valuable, living resource for researchers worldwide.

Findable: Making the Invisible Visible

Before anyone can use data, they first need to know it exists and where to find it. This is where ‘Findable’ comes in. The Archive invests heavily in creating comprehensive, standardized metadata: detailed descriptions that act like a roadmap for each dataset. This metadata is consistently applied, making datasets discoverable through the Archive’s own data catalogue and, crucially, through wider national and international search portals. They use persistent identifiers, like Digital Object Identifiers (DOIs), giving each dataset a permanent, citable link, much like a book’s ISBN. This means that even if the physical location of the data changes on their servers, the DOI keeps resolving to the dataset’s current record. It prevents those frustrating digital dead ends, you know? It’s all about creating an intellectual infrastructure for discovery.
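For readers who want to see what a persistent identifier looks like in practice, here is a small Python sketch. A DOI becomes a resolvable link when it is appended to the `https://doi.org/` resolver. The DOI used below is made up, the function name `doi_to_url` is my own, and the validation pattern is a loose, commonly used approximation rather than the full DOI syntax.

```python
import re
from urllib.parse import quote

# Loose pattern (an assumption, not the full specification):
# "10." + registrant code + "/" + a non-empty suffix.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")


def doi_to_url(doi: str) -> str:
    """Turn a DOI string into a link that goes through the doi.org resolver."""
    doi = doi.strip()
    if not DOI_PATTERN.match(doi):
        raise ValueError(f"not a well-formed DOI: {doi!r}")
    # Percent-encode unusual characters but keep the "/" separator readable.
    return "https://doi.org/" + quote(doi, safe="/")


# Hypothetical DOI used purely for illustration.
print(doi_to_url("10.1234/example-dataset-1"))
```

The point of the indirection is that a citation stores only the DOI. Whoever maintains the record can change where the resolver sends you without breaking any published reference.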

Accessible: Unlocking the Gates, Safely

‘Accessible’ doesn’t necessarily mean ‘open to everyone all the time.’ Instead, it signifies that data can be accessed under clearly defined conditions. The Archive operates a sophisticated tiered access system. Some datasets are completely open, available for instant download, perfect for teaching or broad public interest. Others fall into a ‘safeguarded’ category, requiring researchers to register and agree to specific terms of use, usually for data that contains slightly more sensitive information but is still anonymized. Then, for the most granular and potentially identifiable data, there’s ‘controlled access.’ This often involves researchers working within secure data environments, sometimes even on-site at the Archive or approved remote access facilities, under strict supervision. This careful calibration ensures maximum utility while rigorously upholding ethical obligations and legal requirements, especially concerning personal data under the UK GDPR and the Data Protection Act 2018. It’s a delicate balancing act, one that demands constant vigilance and a robust technical infrastructure.
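The three tiers described above can be pictured as a simple decision rule. The Python sketch below is purely illustrative: the `User` fields and the `can_access` function are hypothetical names of my own, and the real approval process involves human review and legal agreements that no boolean flag captures.

```python
from dataclasses import dataclass
from enum import Enum


class AccessTier(Enum):
    OPEN = "open"
    SAFEGUARDED = "safeguarded"
    CONTROLLED = "controlled"


@dataclass
class User:
    # Hypothetical flags standing in for real registration and approval steps.
    registered: bool = False
    accepted_terms: bool = False
    approved_for_secure_environment: bool = False


def can_access(user: User, tier: AccessTier) -> bool:
    """Each tier adds a condition on top of the one before it."""
    if tier is AccessTier.OPEN:
        return True
    if tier is AccessTier.SAFEGUARDED:
        return user.registered and user.accepted_terms
    # CONTROLLED: everything above, plus approval for a secure environment.
    return (
        user.registered
        and user.accepted_terms
        and user.approved_for_secure_environment
    )


student = User(registered=True, accepted_terms=True)
print(can_access(student, AccessTier.SAFEGUARDED))
print(can_access(student, AccessTier.CONTROLLED))
```

Writing the tiers as cumulative conditions mirrors the idea in the text: the more sensitive the data, the more a researcher has to demonstrate before getting in.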

Interoperable: Speaking a Universal Language

‘Interoperable’ is about ensuring data can ‘talk’ to other datasets and be understood by various software applications. Think about it like different people speaking different languages; interoperability provides the translation. The Archive actively promotes the use of open, non-proprietary data formats (like CSV for tabular data, or XML for structured text) and advocates for standard terminologies and ontologies. This means researchers can combine datasets from different sources or use various analytical tools without encountering frustrating compatibility issues. It’s about building bridges, really, between different research fields and technological platforms, maximizing the potential for cross-disciplinary discovery and new insights that emerge from combining diverse data sources. We’re moving towards a world where data integration is key, and interoperability makes that possible.
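Here is a small Python sketch of why open formats matter. Because CSV and XML are both plain, documented formats, a few lines of standard-library code can move a table from one to the other. The sample data, the tag names (`dataset`, `record`), and the function name are assumptions for illustration, and the sketch assumes every column header is a valid XML element name.

```python
import csv
import io
import xml.etree.ElementTree as ET


def csv_to_xml(csv_text: str, root_tag: str = "dataset", row_tag: str = "record") -> str:
    """Convert tabular CSV text into a simple XML document.

    Assumes each column header is a valid XML element name.
    """
    root = ET.Element(root_tag)
    for row in csv.DictReader(io.StringIO(csv_text)):
        record = ET.SubElement(root, row_tag)
        for column, value in row.items():
            ET.SubElement(record, column).text = value
    return ET.tostring(root, encoding="unicode")


# Made-up sample table standing in for a real deposit.
sample = "region,respondents\nEast,120\nWales,95\n"
xml_text = csv_to_xml(sample)
print(xml_text)
```

Try doing the same with an undocumented proprietary binary format and the contrast becomes obvious: without a published specification, there is nothing for the translation code to rely on.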

Reusable: Maximizing Future Impact

Finally, ‘Reusable’ means data is prepared and documented in such a way that it can be readily utilized for future research, even by those who weren’t involved in its original collection. This is where the Archive’s exhaustive documentation truly shines. Each dataset comes with extensive guides, codebooks, methodological reports, and information on variable definitions. Clear licensing frameworks (like Creative Commons licenses) spell out exactly how the data can be used, shared, and even modified. The goal is to ensure that future researchers don’t have to reinvent the wheel or spend months deciphering cryptic files. The Archive’s long-term stewardship model actively monitors the integrity and relevance of its holdings, undertaking periodic reviews and migrations to newer formats as technology evolves. It’s about creating a lasting legacy of research utility, making sure those initial investments in data collection continue to pay dividends far into the future. Because honestly, what’s the point of collecting amazing data if no one can actually use it later on?
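Monitoring the integrity of holdings is often done with checksums, sometimes called fixity checks. I can’t say exactly which tools the Archive uses, so treat the following Python sketch as a generic illustration of the technique: a digest is recorded when a file is deposited, then recomputed later and compared. The function names and sample bytes are my own.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Return the SHA-256 digest of some bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_fixity(data: bytes, recorded_checksum: str) -> bool:
    """True if the data still matches the checksum recorded at deposit."""
    return sha256_of(data) == recorded_checksum


# At deposit time: store the checksum alongside the file.
original = b"respondent_id,score\n1,42\n"
recorded = sha256_of(original)

# Years later: recompute and compare to detect silent corruption.
corrupted = b"respondent_id,score\n1,43\n"
print(verify_fixity(original, recorded))
print(verify_fixity(corrupted, recorded))
```

Even a single changed character produces a completely different digest, which is what makes this a cheap and reliable alarm for digital decay.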

Why This Matters: The Profound Value Proposition

The UK Data Archive’s intricate work isn’t just an academic exercise; it carries profound implications for society. Its relentless dedication to preserving and providing access to data underpins our collective ability to understand social change, evaluate policy effectiveness, and anticipate future challenges. Without such an archive, each generation would be forced to start from scratch, losing the invaluable insights gleaned from decades of observation. Imagine trying to track the long-term impact of an educational policy without historical enrolment data, or attempting to model an economic recession without decades of market indicators. It’s simply impossible to build a robust, evidence-based understanding of the world without that longitudinal perspective.

Consider, for instance, the way long-running social surveys housed at the Archive have illuminated shifts in public attitudes towards everything from climate change to immigration. These aren’t just fleeting snapshots; they’re continuous, rolling narratives that paint a vivid picture of a changing society. Policymakers regularly consult these trends to inform everything from healthcare strategies to urban planning. I’ve heard stories, apocryphal perhaps but illustrative, of a major government policy on social welfare being entirely re-thought after researchers, using archived data, demonstrated its unintended negative consequences over a decade. That’s the real power of this: informing decisions that affect millions of lives.

This sustained access to data fosters scientific reproducibility, a cornerstone of good research. It allows other researchers to verify findings, build upon existing work, and ask new questions of old data, often leading to entirely novel discoveries. The economic impact is also substantial; by providing a readily available, high-quality data infrastructure, the Archive reduces research costs, accelerates discovery, and ultimately contributes to innovation across sectors. It’s a fundamental pillar supporting the UK’s position as a global leader in research and innovation.

Illuminating Impact: Case Studies That Speak Volumes

The Archive’s profound impact is perhaps most vividly evident through various compelling case studies that starkly showcase its irreplaceable role in both data preservation and accessibility. These aren’t just theoretical examples; they are real-world instances where their work has enabled significant scientific and societal advancements, truly pushing the boundaries of what’s possible with robust data infrastructure.

Case Study 1: The UK Biobank – A Beacon for Public Health

The UK Biobank stands as a monumental testament to the Archive’s commitment to preserving and making accessible truly large-scale, complex datasets. It’s far more than just a collection; it’s a meticulously assembled treasure trove housing de-identified biological samples (blood, urine, saliva) and an extraordinary wealth of health-related data from an astonishing half a million individuals. These aren’t just static snapshots either; participants regularly update their health records, lifestyle information, and undergo various assessments, including cutting-edge imaging of their brain, heart, and bones. Genetic data, lifestyle choices, environmental exposures, and detailed health outcomes are all woven together into an incredibly rich tapestry.

This phenomenal resource serves as an unparalleled engine for scientific discoveries across an astonishing array of fields, significantly advancing our understanding of disease prevention, diagnosis, and treatment. Researchers worldwide, for instance, are leveraging Biobank data to uncover genetic predispositions to conditions like dementia, identify novel risk factors for cardiovascular disease, and pinpoint early indicators of various cancers. It’s become absolutely pivotal in the development of personalized medicine, allowing scientists to understand why certain treatments work for some individuals but not others. Think about the ethical tightrope walked here: gathering such sensitive data from half a million people, ensuring their anonymity while making the data scientifically useful. It’s an incredible feat of logistics, ethical oversight, and data management. It’s honestly a prime example of how big data, when handled responsibly, can fundamentally transform public health outcomes globally.

Case Study 2: Optimum Patient Care Research Database (OPCRD) – Real-World Healthcare Insights

The Optimum Patient Care Research Database (OPCRD) offers an equally compelling illustration of the Archive’s critical function, providing high-quality, de-identified data derived directly from primary care medical records across the UK. This isn’t theoretical lab data; it’s raw, real-world information reflecting the everyday health experiences of millions. The data, rigorously anonymized to safeguard patient privacy, captures a wealth of detail on diagnoses, prescriptions, referrals, and clinical measurements, providing an unparalleled longitudinal view of patient journeys within the National Health Service.

This invaluable resource supports healthcare research by offering genuine real-world data on the prevalence of chronic diseases like asthma, diabetes, and heart conditions. It helps assess the effectiveness of various treatments in routine clinical practice, often revealing insights that randomized controlled trials might miss. Moreover, it illuminates patterns in patient outcomes, informs evidence-based care guidelines, and plays a crucial role in shaping national healthcare policy. During major public health events, like the recent pandemic, OPCRD data proved instrumental in understanding infection rates, vaccine efficacy, and the differential impact of the virus on various population groups. It’s a powerful tool for understanding how healthcare truly operates on the ground, enabling more responsive and effective public health interventions. Without it, our understanding of disease trajectories and treatment impacts would be significantly hampered; it’s helping to save lives through data.

Case Study 3: Unlocking Our Sound Heritage – Preserving Britain’s Acoustic Soul

‘Unlocking Our Sound Heritage’ (UOSH) represents a fascinating and deeply culturally significant UK-wide project, highlighting the Archive’s dedication to preserving something often overlooked: sound. This ambitious initiative aims to meticulously preserve, digitize, and ultimately provide public access to a substantial portion of the nation’s fragile and diverse sound heritage. We’re talking about everything from the crackle of early wax cylinders to more modern, yet equally vulnerable, magnetic tapes.

By digitizing these recordings, some dating as far back as the 1880s right up to the present day, the project ensures that unique and ephemeral sounds are secured for eternity. Imagine the loss if these irreplaceable recordings were allowed to simply degrade and disappear! This includes, but isn’t limited to, the distinct nuances of local dialects (a linguist’s dream, I tell you!), precious oral histories that capture the lived experiences of past generations, the stirring sounds of protest and folk music, and even the rare, haunting calls of endangered wildlife. The technical challenges involved are immense, requiring specialized equipment, expert audio engineers, and sophisticated digital preservation techniques to carefully transfer these often-fragile analogue formats into high-quality digital files.

This isn’t just about academic research; it’s about connecting people to their past, allowing future generations to hear the voices and sounds of their ancestors and their environment. It makes these unique sonic artifacts accessible for researchers in fields like linguistics, social history, musicology, and environmental science, all while offering immense enjoyment and cultural enrichment to the general public. It’s safeguarding the very ‘soundtrack’ of British life, ensuring that these auditory windows into history remain wide open. It really hammers home that data isn’t just numbers and text; it’s the rich, vibrant fabric of our shared human experience.

The Unseen Architects: People, Technology, and Continuous Innovation

Behind this colossal undertaking is a dedicated team of experts. We’re talking about highly skilled data scientists who wrestle with complex algorithms, meticulous archivists with an almost forensic attention to detail, legal experts who navigate the labyrinthine world of data protection, and IT professionals who maintain the robust infrastructure. Their collective expertise ensures that data is not just stored, but intelligently managed, continuously monitored, and securely accessible. The Archive’s commitment to continuous innovation is also paramount; they are constantly evaluating new technologies, from advanced storage solutions to cutting-edge AI tools for data analysis and metadata generation. This helps them remain at the forefront of digital preservation, ready for whatever the next wave of data revolution brings. It’s a constant race against obsolescence, and keeping pace for more than half a century is quite impressive when you think about it.

Looking Ahead: Challenges and the Path Forward

While the UK Data Archive has achieved so much, the landscape of data is never static. They face ongoing challenges: securing consistent funding to maintain and expand their infrastructure, grappling with the explosion of ‘big data’ from new sources like social media and the Internet of Things, and adapting to ever-evolving data protection regulations. The emergence of AI and machine learning also presents both opportunities and complexities, offering new ways to analyze and curate data, but also raising fresh ethical questions. The Archive’s journey is one of continuous adaptation, a testament to its enduring relevance in an increasingly data-driven world. Their proactive engagement with researchers and policymakers, coupled with robust training and public engagement initiatives, underscores their commitment to fostering data literacy across society. After all, what good is data if people don’t understand how to use it, or how to critically interpret its findings?

A Legacy for Tomorrow: The Indispensable Role of the UK Data Archive

The UK Data Archive’s unwavering dedication to preserving and providing access to a vast and ever-growing array of datasets underscores its critical role in supporting research, informing policy, and enriching education. Through its meticulous curation, its steadfast adherence to the FAIR principles, and its innovative projects, it acts as the vigilant guardian of our collective digital memory. It ensures that invaluable data, from the granular details of individual health to the sweeping narratives of social change and the fragile echoes of our cultural past, remains a vibrant, accessible, and profoundly impactful resource for current scholars, future innovators, and generations yet to come. It’s more than just an archive; it’s a living testament to the power of shared knowledge and a vital investment in our collective future. What would we even do without it, really? The mind boggles.